Free, self-hosted AI Chatbot built on Ollama, the Llama 3 LLM, and the OpenWebUI interface.

Personal chatbot powered by Ollama, the open-source large language model Llama 3, and the OpenWebUI interface, running on your own server.

Rent a virtual (VPS) or dedicated server from HOSTKEY with a pre-installed, ready-to-use Self-hosted AI Chatbot that can process your data and documents using various LLMs.

You can also install the most recent versions of the Phi3, Mistral, Gemma, and Code Llama models.

Servers available in the Netherlands, Finland, Germany and Iceland.

AI Chatbot running on a shared, HOSTKEY-managed GPU server. You get admin rights to use AI Chatbot privately and manage your team.

AI Chatbot pre-installed on your own VPS or dedicated GPU server. You get full admin rights over your personal server.

With every AI Chatbot plan, you get two pre-installed, ready-to-use models: gemma2:latest (9.2B) and llama3:latest (8.0B).

The “Self-hosted AI Chatbot” plan lets you manage and load additional models. This feature is not available on the “AI Chatbot Lite” plan.

AI Chatbot Lite - a paid trial tier of the main product

AI Chatbot Lite runs on a shared, HOSTKEY-managed GPU server. It is a paid trial tier of the main product (Self-hosted AI Chatbot), and the payment covers the use of that shared GPU server. AI Chatbot Lite offers basic chatbot functionality with no limits on the number of users or prompt requests, which makes it a perfect trial plan before purchasing the Self-hosted AI Chatbot.

An AI chatbot hosted on your own server ensures security, with all data stored and processed within your environment.

OpenWebUI allows you to use multiple models in a single workspace, speeding up workflow and enabling you to process a single prompt across several models simultaneously without switching windows. Just make one request and visually compare the results to select the best option.

Hosting an AI Chatbot on your own server lets you conduct code reviews without the risk of code leakage, protecting corporate confidentiality. A self-hosted solution also scales with your workload: you can increase server capacity as needed.

Connect an AI chatbot to your knowledge base to generate answers to frequently asked questions from your users or employees.

Analyze corporate documents in various formats, such as pdf, csv, rst, xml, md, epub, doc, docx, xls, xlsx, ppt, and txt, while keeping all analytics on your server to ensure confidentiality.

Personal chatbot powered by Ollama, the open-source large language model Llama 3, and the OpenWebUI interface: a HOSTKEY solution built on officially free and open-source software.

Ollama is an open-source project that serves as a powerful and user-friendly platform for running LLMs on your local machine. Ollama is licensed under the MIT License.

Llama 3 is a large language model from Meta, released in versions with 8 billion and 70 billion parameters. The 70-billion-parameter version is among the larger openly available language models.

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. OpenWebUI is licensed under the MIT License.

We guarantee that our servers are running safe and original software.

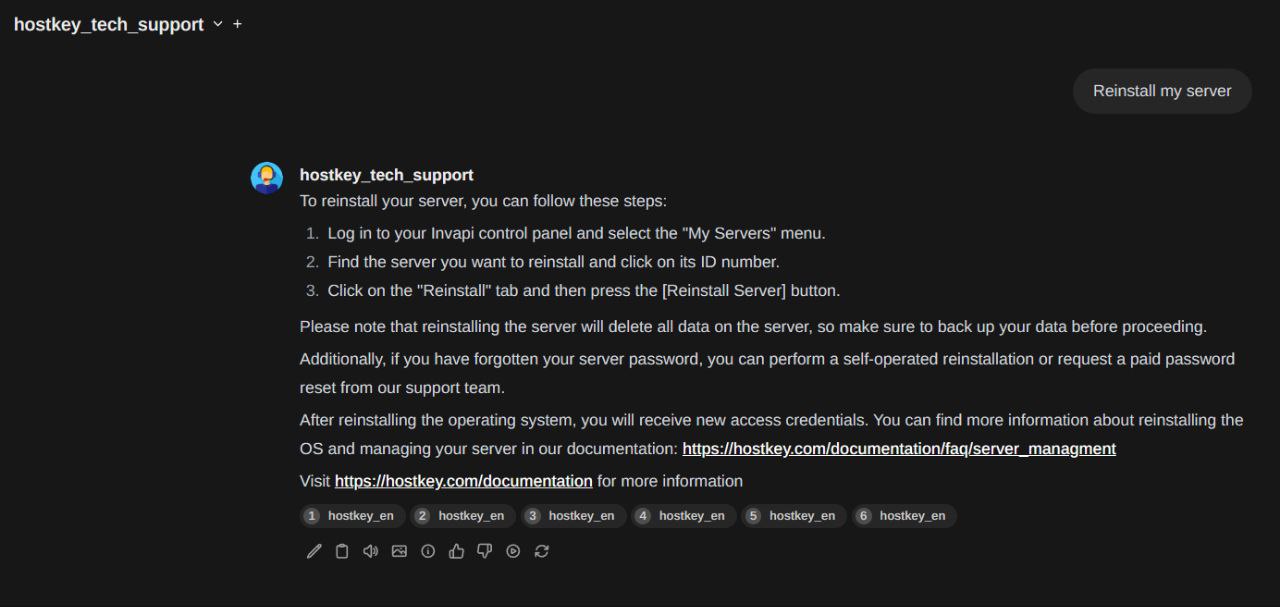

To install AI Chatbot, select it in the "App Marketplace" tab (AI and Machine Learning group) while ordering a server on the HOSTKEY website. Our auto-deployment system will install the software on your server.

“Self-hosted AI Chatbot” runs on your personal server rented at HOSTKEY. It provides full chatbot functionality with no limits on the number of users or prompt requests.

“AI Chatbot Lite” is a paid trial tier of the main product, which runs on a shared HOSTKEY-managed GPU server. AI Chatbot Lite offers basic chatbot functionality with no limits on the number of users or prompt requests, making it a perfect trial plan before purchasing the Self-hosted AI Chatbot (main product).

The payment for AI Chatbot Lite covers the use of a shared HOSTKEY-managed GPU server, meaning you don’t need to rent a server to use the software, which is free by itself.

To switch plans, just order the new plan and cancel the previous one. You can also use both plans simultaneously, as they operate independently of each other.

Yes, with the “AI Chatbot Lite” plan, you can interact with the chatbot using API requests. To set it up, follow these instructions:

https://docs.openwebui.com/getting-started/advanced-topics/api-endpoints

https://github.com/ollama/ollama/blob/main/docs/api.md
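As a rough illustration of what such an API request can look like, here is a minimal Python sketch that posts a prompt to Open WebUI's OpenAI-compatible chat endpoint (`/api/chat/completions`) with a bearer token. The base URL and API key below are placeholders, not real values, and the helper names `build_chat_request` and `ask` are hypothetical; check the documentation linked above for the details that apply to your installation.

```python
import json
import urllib.request

# Assumptions (not from this page): both values are placeholders.
BASE_URL = "https://chat.example.com"  # hypothetical address of your chatbot
API_KEY = "sk-your-api-key"            # API key created in your Open WebUI account


def build_chat_request(prompt: str, model: str = "llama3:latest") -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/api/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str, model: str = "llama3:latest") -> str:
    """Send the prompt and return the text of the assistant's reply."""
    with urllib.request.urlopen(build_chat_request(prompt, model)) as response:
        body = json.load(response)
    # OpenAI-style responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With real values filled in, `ask("Summarize this plan in one sentence.")` would return the model's reply as a string; passing `model="gemma2:latest"` targets the other pre-installed model.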

The RAG (Retrieval Augmented Generation) feature allows the chatbot to generate responses based on specific data, such as your documentation. Unfortunately, this feature is not available on the “AI Chatbot Lite” trial plan.

To access this feature, we recommend upgrading to the “AI Chatbot on your own server” plan.

Learn more about adding documents to the knowledge base (RAG):

https://hostkey.com/documentation/marketplace/machine_learning/ai_chatbot/#adding-documents-to-the-knowledge-base-rag
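To make the idea behind RAG concrete, the toy Python sketch below picks the document that best matches a question and places it in the prompt as context. It is only an illustration under simplifying assumptions: it ranks documents by plain keyword overlap, whereas real RAG pipelines typically use embedding-based search, and it is not how the chatbot itself implements the feature. The function names are hypothetical.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list, top_k: int = 1) -> list:
    """Return the top_k documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: list, top_k: int = 1) -> str:
    """Combine the retrieved context and the question into a single prompt."""
    context = "\n".join(retrieve(question, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

The resulting prompt would then be sent to the model, so the answer is grounded in your own documents rather than only in the model's training data.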

The "AI Chatbot Lite" plan offers 2 models pre-installed by default: gemma2:latest 9.2B and llama3:latest 8.0B. You can use them either separately or simultaneously. The "AI Chatbot Lite" plan does not allow you to install or delete models.

The "AI Chatbot on Your Own Server" plan also includes 2 pre-installed models by default: gemma2:latest 9.2B and llama3:latest 8.0B. However, with this plan, you can install and delete any models available in the Ollama library.
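On your own server, models can also be managed through Ollama's REST API. The Python sketch below builds requests for listing (`GET /api/tags`), installing (`POST /api/pull`), and deleting (`DELETE /api/delete`) models. It assumes Ollama is reachable at its default local address, `http://localhost:11434`, which may differ on your server; the helper names are hypothetical, and `mistral` is used only as an example model name.

```python
import json
import urllib.request
from typing import Optional

# Assumption: Ollama listens on its default local address; adjust if needed.
OLLAMA_URL = "http://localhost:11434"


def ollama_request(method: str, path: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """Build a request to the Ollama REST API with an optional JSON body."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    return urllib.request.Request(
        url=f"{OLLAMA_URL}{path}",
        data=data,
        headers={"Content-Type": "application/json"},
        method=method,
    )


def list_models_request() -> urllib.request.Request:
    """List the models currently installed."""
    return ollama_request("GET", "/api/tags")


def pull_model_request(name: str) -> urllib.request.Request:
    """Install a model from the Ollama library; stream=False asks for one final response."""
    return ollama_request("POST", "/api/pull", {"model": name, "stream": False})


def delete_model_request(name: str) -> urllib.request.Request:
    """Remove an installed model."""
    return ollama_request("DELETE", "/api/delete", {"model": name})


def send(request: urllib.request.Request) -> dict:
    """Send a request and decode the JSON reply (an empty body becomes {})."""
    with urllib.request.urlopen(request) as response:
        raw = response.read()
    return json.loads(raw) if raw else {}
```

For example, `send(pull_model_request("mistral"))` would download the model, and `send(list_models_request())` would then include it in the returned list. Large models can take a while to download, so the pull call may block for several minutes.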

A self-hosted AI Chatbot has a number of advantages compared to popular paid services:

Get a ready-to-use Self-hosted AI Chatbot on servers in the Netherlands, Finland, Germany and Iceland.