Self-hosted AI Chatbot

In this article

- Key Features

- Deployment Features

- Getting Started with Your Deployed AI Chatbot

- OpenWebUI Initial Screen

- Configuring Your OpenWebUI Workspace

- Adding and Removing Models

- Adding Documents to the Knowledge Base (RAG)

- Tips for Working with RAG in OpenWebUI

- Chatbot Update

- Practical Articles and Tips

- Ordering a Server with AI Chatbot via API

Information

Self-hosted AI Chatbot is a local solution that combines several open-source components. Its core is Ollama, a framework for running and managing large language models (LLMs) on local computing resources; it downloads and deploys the selected LLM. Open WebUI provides the graphical interface: this web application lets users send text queries and receive responses generated by the language models. Together, these components form a fully autonomous, local solution for deploying modern open-source language models while maintaining full control over data and system performance.

Key Features

- Web Interface: Open WebUI provides an intuitive web interface that centralizes control of, and interaction with, local AI language models from the Ollama repository, making the models easier to use for users of any skill level.

- Integration with Numerous Language Models: Ollama gives you access to many free language models for natural language processing (NLP) tasks. You can also integrate your own customized models.

- Tasks: Users can hold conversations, get answers to questions, analyze data, translate text, and build their own chatbots or AI-powered applications with the help of LLMs.

- Open Source Code: Ollama is an open-source project, enabling users to tailor and modify the platform according to their specific requirements.

- Web Scraper and Internal Document Search (RAG): Through OpenWebUI, you can search across various document types such as text files, PDFs, PowerPoint presentations, websites, and YouTube videos.

Note

For more information on Ollama's main settings and Open WebUI documentation, refer to Ollama developer documentation and Open WebUI documentation.

Deployment Features

| ID | Compatible OS | VM | BM | VGPU | GPU | Min CPU (Cores) | Min RAM (GB) | Min HDD/SSD (GB) | Active |
|---|---|---|---|---|---|---|---|---|---|
| 117 | Ubuntu 22.04 | - | - | + | + | 4 | 16 | - | Yes |

- Installing the OS and the server software takes 15 to 30 minutes in total.

- Ollama Server downloads the LLM and loads it into memory, which simplifies deployment.

- Open WebUI operates as a web application that connects with the Ollama Server.

- Users interact with LLaMA 3 through the Open WebUI web interface by sending queries and receiving responses.

- All computations and data processing are executed locally on the server, ensuring privacy and control over information flow. System administrators have the flexibility to customize the LLM for bespoke tasks through the functionalities provided within OpenWebUI.

- The system requirements stipulate a minimum of 16 GB RAM to ensure optimal performance.
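The request path described in the list above (a client sends a prompt, and the Ollama server answers from the locally loaded model) can also be exercised directly against Ollama's REST API, without Open WebUI. This is a minimal sketch, not the way Open WebUI itself is implemented. It assumes that Ollama listens on its default port 11434 on the same machine and that a model tagged `llama3` has already been downloaded; the address, the model tag, and the helper names are illustrative assumptions.

```python
import json
from urllib.request import Request, urlopen

OLLAMA_URL = "http://127.0.0.1:11434"  # assumption: default local Ollama address


def build_generate_request(prompt: str, model: str = "llama3") -> bytes:
    """Encode a non-streaming request body for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def extract_answer(raw_body: bytes) -> str:
    """Return the generated text from a non-streaming /api/generate reply."""
    return json.loads(raw_body.decode("utf-8"))["response"]


def ask(prompt: str, model: str = "llama3", base_url: str = OLLAMA_URL) -> str:
    """Send one prompt to the Ollama server and return the model's answer."""
    request = Request(
        f"{base_url}/api/generate",
        data=build_generate_request(prompt, model),  # a body makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urlopen(request, timeout=120) as response:
        return extract_answer(response.read())
```

On the server itself, `ask("Summarize what RAG is in one sentence.")` would return the model's reply as a string; nothing in the exchange leaves the machine.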

Once the installation is complete, open the server's web interface at https://ollama<Server_ID_from_Invapi>.hostkey.in.

Note

Unless otherwise specified, by default we install the latest release version of software from the developer's website or operating system repositories.

Getting Started with Your Deployed AI Chatbot

After you complete the order and payment, a notification confirming that the server is ready for operation is sent to the email address provided during registration. It includes the VPS IP address and the login credentials for connecting. Servers are managed through our control panel and API, Invapi.

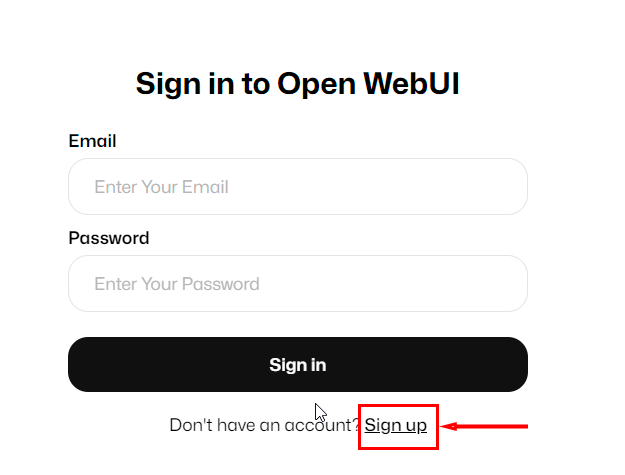

Once you click the webpanel tag link, a login window will appear.

The access details for logging into Ollama's Open WebUI web interface are as follows:

- Login URL for the management panel with the Open WebUI web interface: use the webpanel tag. The specific address has the format https://ollama<Server_ID_from_Invapi>.hostkey.in, as indicated in the confirmation email upon handover.
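If you manage several servers, the address format above is easy to generate in a script. The helper below is a small sketch; the function name and the sample ID `12345` are hypothetical, and the real server ID is the one shown in Invapi.

```python
def panel_url(server_id: str) -> str:
    """Build the Open WebUI address from the server ID shown in Invapi."""
    server_id = str(server_id).strip()
    if not server_id:
        raise ValueError("server_id must not be empty")
    return f"https://ollama{server_id}.hostkey.in"


print(panel_url("12345"))  # placeholder ID, replace with your own
```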

After following this link, create a username and password in Open WebUI for user authentication.

Attention

Upon the registration of the first user, the system automatically assigns them an administrator role. To ensure security and control over the registration process, all subsequent registration requests must be approved by an administrator using their account credentials.

OpenWebUI Initial Screen

The initial screen presents a chat interface along with several example input prompts (queries) to demonstrate the system's capabilities. To initiate interaction with the chatbot, users must select their preferred language model from available options. In this case, LLaMA 3 is recommended, which boasts extensive knowledge and capabilities for generating responses to various queries.

After selecting a model, users can enter their first query in the input field, and the system will generate a response based on the analysis of the entered text. The example prompts presented on the initial screen showcase the diversity of topics and tasks that the chatbot can handle, helping users orient themselves with its capabilities.

Configuring Your OpenWebUI Workspace

To further customize your chat experience, navigate to the Workspace section. Here, you'll find several options for customization:

- Models - This section allows you to customize existing models for your specific needs. You can set system prompts and parameters, connect documents or knowledge bases, and define tools, filters, or actions.

- Knowledge - This section configures the knowledge base (RAG) based on your documents.

- Prompts - Create, edit, and manage your own prompts (input requests) for more effective interaction with the chatbot in this section.

- Tools - Connect custom Python scripts here, which are provided to the LLM during a request. Tools allow the LLM to perform actions and obtain additional context as a result. Ready-made community tools can be viewed on the website.

- Functions - Connect modular operations here, which allow users to expand the capabilities of AI by embedding specific logic or actions directly into workflows. Unlike tools that operate as external utilities, functions execute within the OpenWebUI environment and handle tasks such as data processing, visualization, and interactive communication. Ready-made function examples can be viewed on the website.

Adding and Removing Models

Ollama offers the ability to install and use a wide range of language models, not just the default one. Before installing new models, ensure that your server configuration meets the requirements of the chosen model regarding memory usage and computational resources.

Installing a Model via the OpenWebUI Interface

To install models through the OpenWebUI interface, follow these steps:

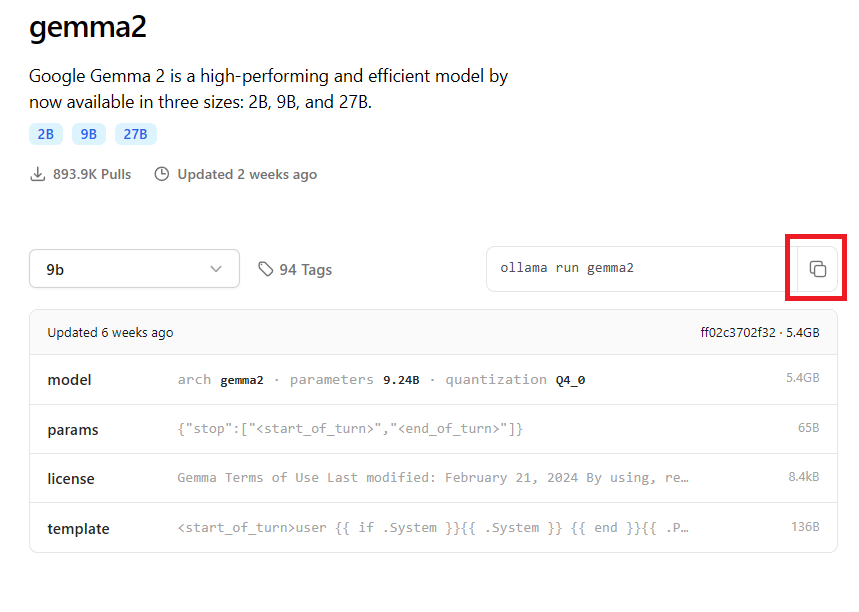

-

Select the desired model from the Ollama library and navigate to its page by clicking on its name. Choose the type and size of the model (if necessary). The most suitable "dimensionality" will be offered by default. Then, click the icon with two squares to the left of the command like

ollama run <model_name>to copy the installation string to your clipboard.

-

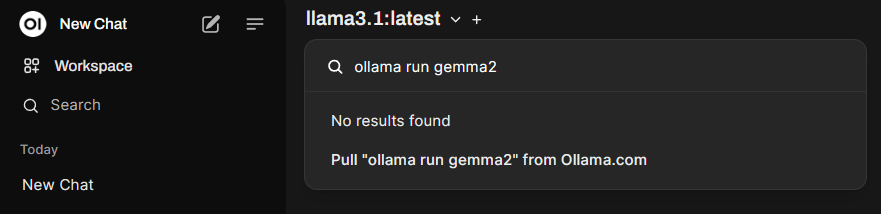

Click the model name in the top left corner of the OpenWebUI chat-bot window and paste the copied command into the Search a model field.

-

Click on string Pull "ollama run

" from Ollama.com .

-

After a successful download and installation, the model will appear in the dropdown list and become available for selection.

Installing a Model via Command Line

To install new models, you need to connect to your server using SSH and execute the corresponding command as described in this article.
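When models are installed or removed regularly, the command-line step can be wrapped in a small script that runs on the server. The sketch below is one possible approach, not the procedure from the linked article: it shells out to the Ollama CLI, whose `pull` and `rm` subcommands download and delete a model. The helper names and the example tag `llama3:8b` are illustrative assumptions.

```python
import shutil
import subprocess

ALLOWED_ACTIONS = ("pull", "rm")  # download a model / remove a model


def ollama_command(action: str, model: str) -> list[str]:
    """Build the argument list for an Ollama CLI call, rejecting odd input."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    if not model or any(ch.isspace() for ch in model):
        raise ValueError("model tag must be non-empty and contain no whitespace")
    return ["ollama", action, model]


def run_ollama(action: str, model: str) -> int:
    """Run the command on this machine and return the CLI's exit code."""
    if shutil.which("ollama") is None:
        raise RuntimeError("ollama executable not found in PATH")
    return subprocess.run(ollama_command(action, model), check=False).returncode
```

For example, `run_ollama("pull", "llama3:8b")` downloads a model and `run_ollama("rm", "llama3:8b")` removes it again. Passing the arguments as a list rather than a shell string avoids quoting problems with unusual model tags.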

Removing a Model

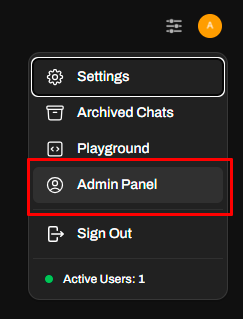

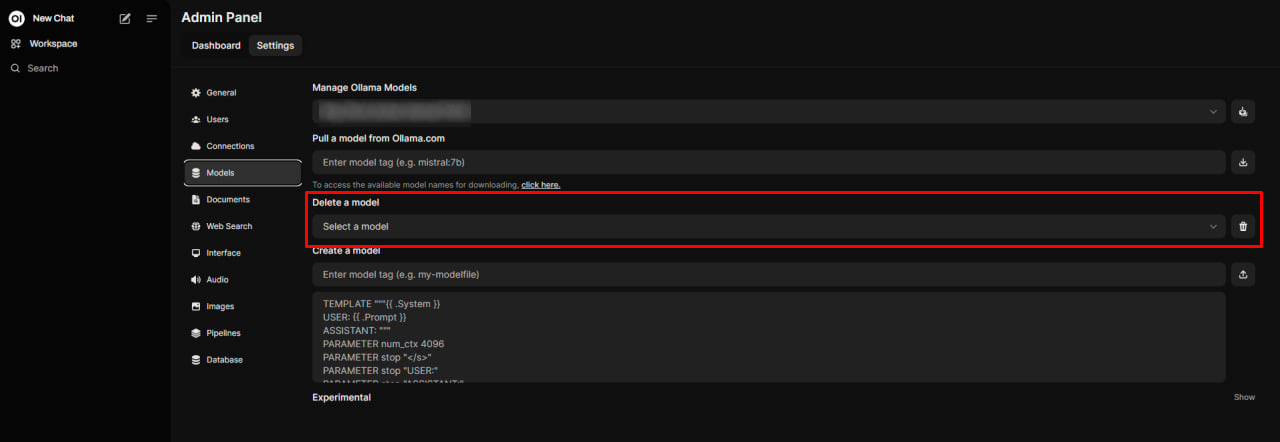

To delete models from the OpenWebUI interface, open the settings: User icon in the top right corner >> Settings >> Admin Panel >> Models.

Next, click the Manage Models button to open the model management window.

In it, select the model in the Delete a model drop-down list and click the icon next to it.

To remove a model from the command line, run ollama rm <model_name> as root.

Adding Documents to the Knowledge Base (RAG)

The Knowledge option allows you to create knowledge bases (RAG) and upload documents of various formats, such as PDF, text files, Word documents, PowerPoint presentations, and others. These documents are then analyzed and can be discussed with the chatbot. This is especially helpful for studying and understanding complex documents, preparing for presentations or meetings, analyzing data and reports, checking written work for grammatical errors, style, and logic, working with legal and financial documents, as well as research in various fields. The chatbot can help you understand document content, summarize it, highlight key points, answer questions, provide additional information, and offer recommendations.

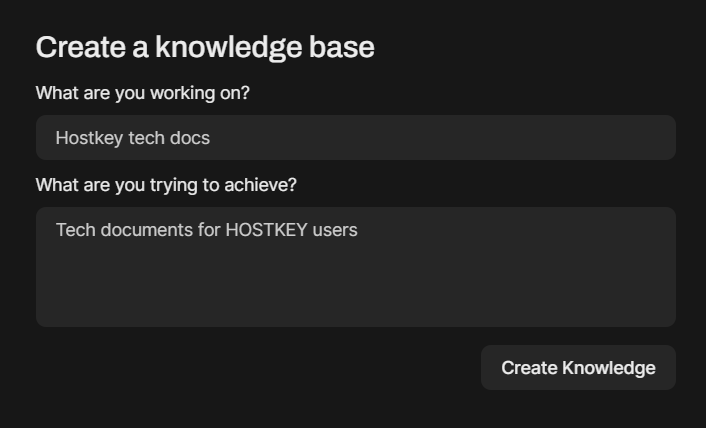

To work with documents, you need to create at least one knowledge base. To do this, go to the Workspace >> Knowledge section and click the add (+) button in the upper right corner. Enter the name and a description of the knowledge base and create it with the Create Knowledge button.

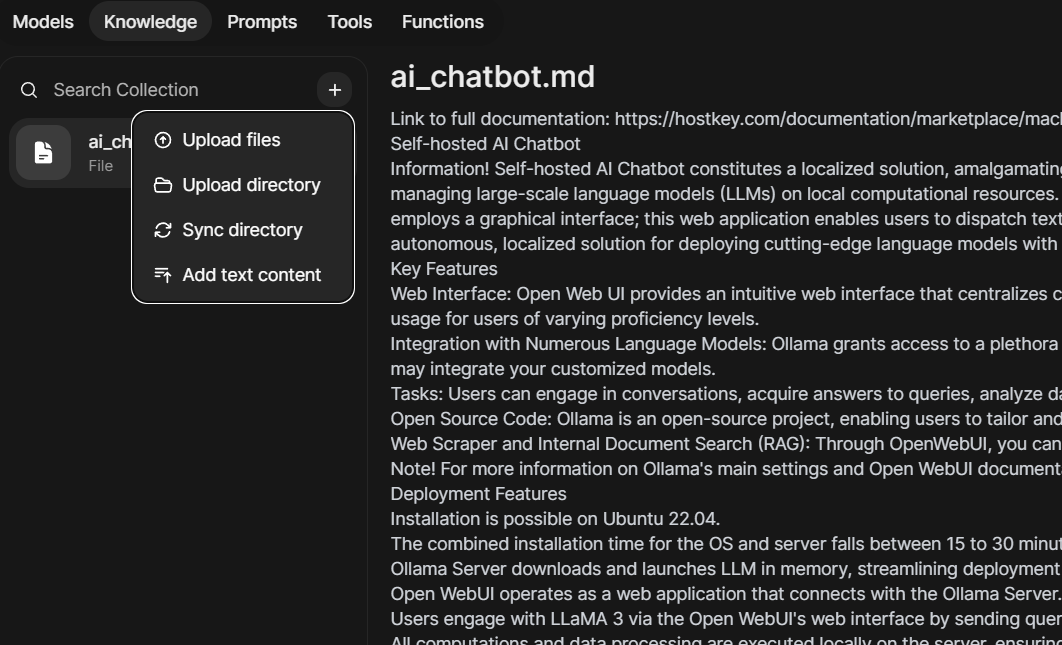

Inside the knowledge base section, the left side lists the files uploaded to the knowledge base. The right side shows the content of the selected file and also serves as the area where you can drag and drop files for uploading.

Also, by clicking on the button next to the Search Collection search bar, you can:

- Upload files to the knowledge base (Upload files)

- Upload an entire directory of files (Upload directory)

- Synchronize files and their content between the knowledge base and directory (Sync directory). This option allows you to update the knowledge base.

- Add content to the knowledge base through Open WebUI's built-in editor (Add text content)

You can delete any file from the knowledge base by selecting it and clicking on the cross that appears.

Attention

Depending on the chosen model and GPU power on the server, creating embeddings in document settings can take from tens of seconds to tens of minutes.

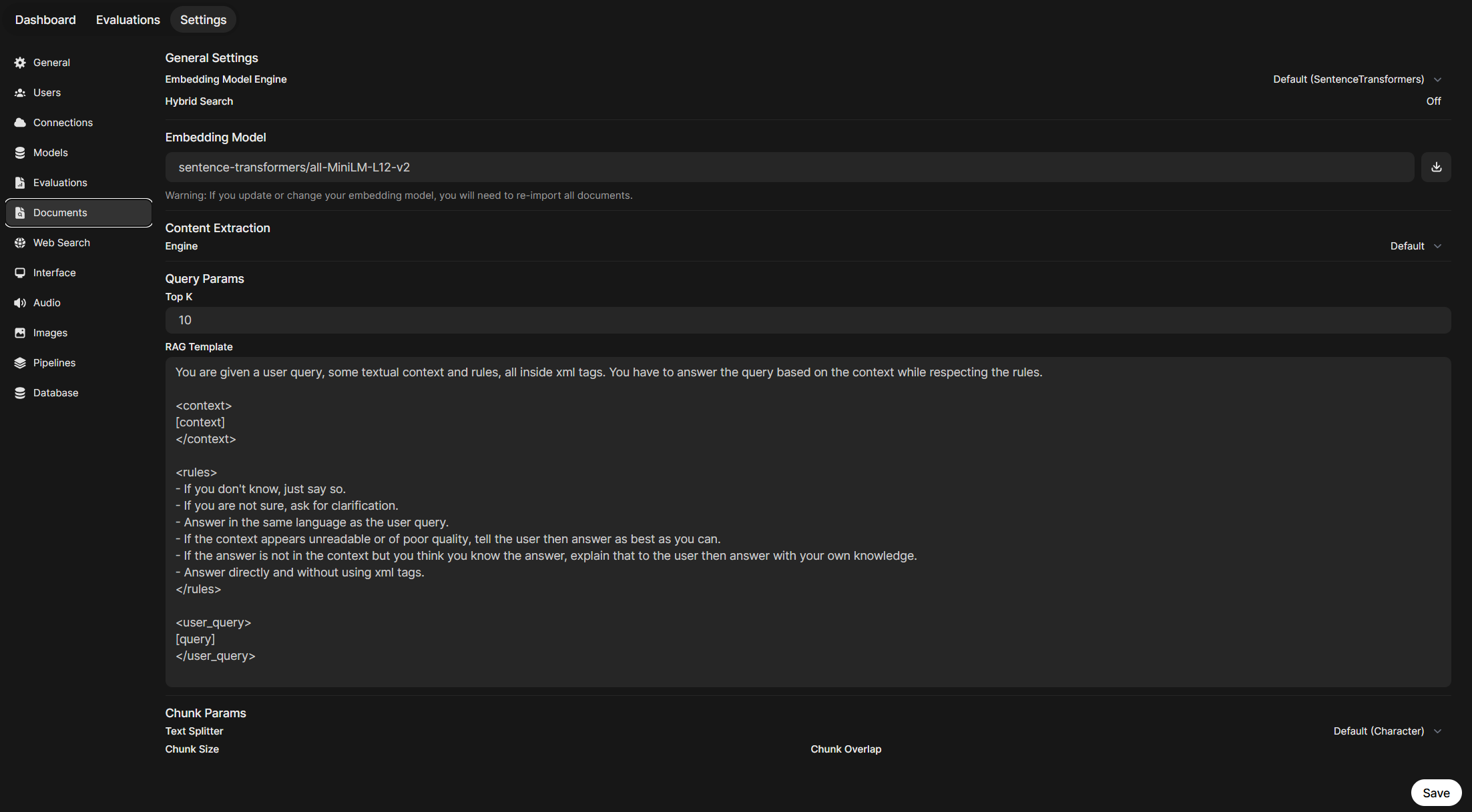

To manage document settings, go to User Name >> Settings >> Admin Settings >> Documents.

Here you can configure:

- Embedding Model Engine - The engine for creating embeddings. By default, SentenceTransformers is used, but you can upload and use embedding models from Ollama for this purpose.

- Hybrid Search - When enabled, search quality for knowledge bases and documents improves, but responses may become significantly slower.

- Embedding Model - The model used to create embeddings. We recommend setting this field to the improved model sentence-transformers/all-MiniLM-L12-v2 and clicking the upload button to the right of it.

- Engine - Content extraction engine. Use the default value.

- Query Params - This section configures parameters that influence queries to uploaded documents and the way the chatbot generates responses.

    - Top K - The number of best search results that are used. For example, with Top K = 5, the 5 most relevant documents or text fragments will be shown in the response.

    - RAG Template - RAG (Retrieval Augmented Generation) is a method where the system first extracts relevant parts of text from a set of documents and then uses them to generate an answer with the help of a language model. RAG Template sets the template for the request sent to the language model when this method is used. Configuring it lets you adapt the query format to the language model to get higher quality answers in specific usage scenarios.

- Chunk Params - This section configures how uploaded documents are split into chunks. Chunking divides large documents into smaller parts for easier processing. Here, you can set the maximum chunk size in characters (Chunk Size) and the number of characters by which adjacent parts overlap (Chunk Overlap). Recommended values are 1500 and 100, respectively. In this section, you can also enable the PDF Extract Images (OCR) option. OCR is a technology for recognizing text in images; when it is enabled, the system extracts images from PDF files and applies OCR to recognize any text they contain.

- Files - Here, you can set the maximum size of an uploaded file and the maximum number of files.
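To make the Chunk Size and Chunk Overlap settings concrete, the sketch below splits a text into fixed-size character windows that share a number of characters with their neighbor. It is a deliberately simplified illustration using the recommended values 1500 and 100. Open WebUI's own splitter may work differently (for example, by preferring paragraph or sentence boundaries), so treat the function as an assumption-laden model of the idea rather than the actual implementation.

```python
def chunk_text(text: str, chunk_size: int = 1500, chunk_overlap: int = 100) -> list[str]:
    """Split text into character windows; neighbors share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in the range [0, chunk_size)")

    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


sample = "".join(str(i % 10) for i in range(3000))  # synthetic 3000-character text
parts = chunk_text(sample)
print([len(part) for part in parts])
```

A larger overlap makes it less likely that a sentence is cut off between two chunks, at the cost of storing and embedding more text.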

The Reset Upload Directory and Reset Vector Storage/Knowledge buttons below clear the directory of uploaded document files and reset the entire saved vector storage and knowledge base. Use them only when absolutely necessary; in normal use, delete files and knowledge bases through Workspace.

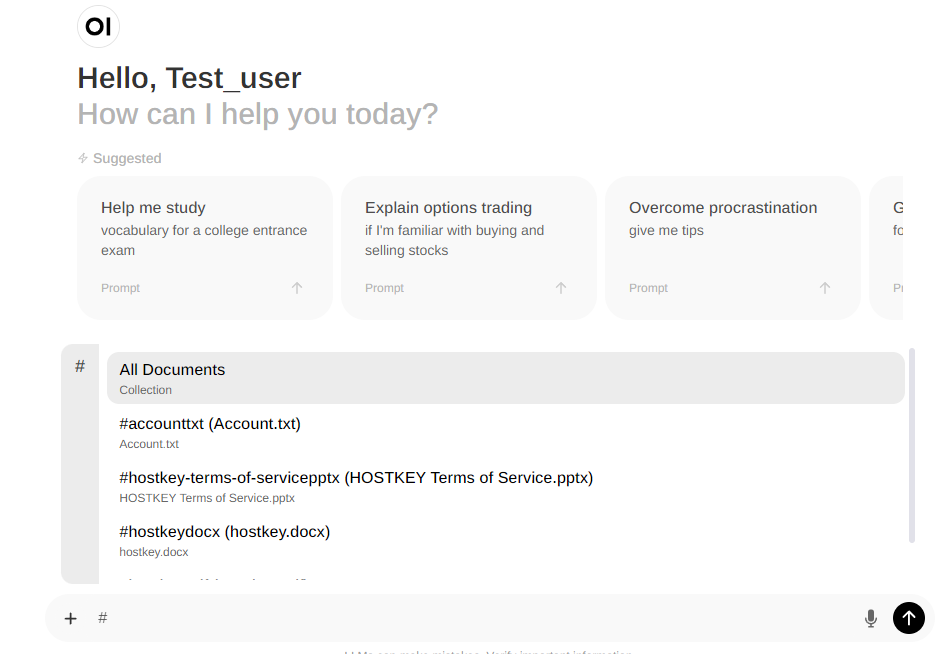

After uploading documents, you can work with them in chat mode. To do this, start a message in the chat line with the # symbol and select the desired document or knowledge base from the dropdown list. The response is then formed from the data in the selected document. This gives you contextual answers based on the uploaded information, which is useful for tasks such as searching for information, analyzing data, and making decisions based on documents.
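What happens behind such a document-based answer can be sketched in a few lines: the chunks most similar to the query are selected (Top K) and inserted into a prompt template (RAG Template) before the request goes to the language model. The code below is a toy illustration of that flow. The two-dimensional vectors stand in for real embeddings, and the template text with its `{context}` and `{question}` placeholders is hypothetical, not Open WebUI's actual template syntax.

```python
import math

# Hypothetical template; Open WebUI's real RAG Template uses its own placeholders.
EXAMPLE_TEMPLATE = "Use the context to answer.\n\nContext:\n{context}\n\nQuestion: {question}"


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either vector is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunks: list[tuple[str, list[float]]], k: int = 5) -> list[str]:
    """Return the texts of the k chunks whose embeddings are closest to the query."""
    ranked = sorted(chunks, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_prompt(template: str, context_chunks: list[str], question: str) -> str:
    """Fill the template with the retrieved chunks and the user's question."""
    return template.format(context="\n".join(context_chunks), question=question)


# Toy data: (chunk text, made-up embedding)
store = [
    ("Refunds are processed within 5 days.", [1.0, 0.0]),
    ("Our office is closed on Sundays.", [0.0, 1.0]),
    ("Refund requests need an order number.", [0.9, 0.1]),
]
best = top_k([1.0, 0.0], store, k=2)
print(build_prompt(EXAMPLE_TEMPLATE, best, "How do refunds work?"))
```

With `k=2`, only the two refund-related chunks reach the prompt; raising Top K adds more context but also more text for the model to process.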

Note

You can also use the # symbol to add websites or YouTube videos to your query, so that the LLM can draw on them as well.

Tips for Working with RAG in OpenWebUI

- Any change to the embedding model requires deleting the documents and uploading them to the vector database again. Changing RAG parameters does not require this.

- When adding or removing documents, be sure to update the custom model (if one exists) and the document collection. Otherwise, searching for them may not work correctly.

- OpenWebUI recognizes pdf, csv, rst, xml, md, epub, doc, docx, xls, xlsx, ppt, pptx, txt formats, but it is recommended to upload documents in plain text.

- Using hybrid search improves results but consumes many resources, and the response time may take 20–40 seconds even on a powerful GPU.

Chatbot Update

When new models become available, or to fix bugs and increase the functionality of the AI chatbot, you need to update two of its components - Ollama and Open WebUI.

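Updating Ollama

- To update Ollama, log in to the server via SSH as root and re-run the official Ollama installation script, which also upgrades an existing installation: curl -fsSL https://ollama.com/install.sh | sh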

- Open a browser and check that Ollama is available through the API by entering the address <server IP>:11434. You should receive the message Ollama is running.

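- If you don't have access to the API, Ollama may be listening only on the local interface. In that case, you may need to add a line such as Environment="OLLAMA_HOST=0.0.0.0" to the [Service] section of the service file /etc/systemd/system/ollama.service and then restart the service, for example with systemctl daemon-reload followed by systemctl restart ollama.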
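The availability check from the steps above can also be scripted, for instance to run after every update. The following sketch requests the root address of the Ollama API and compares the reply with the expected message. It assumes plain HTTP on port 11434; the helper names and the documentation-range IP address `203.0.113.10` in the usage note are placeholders.

```python
from urllib.request import urlopen


def ollama_base_url(server_ip: str, port: int = 11434) -> str:
    """Build the API address; plain HTTP is an assumption of this sketch."""
    return f"http://{server_ip}:{port}"


def is_ollama_banner(body: str) -> bool:
    """True if the reply is the status message Ollama serves at its root URL."""
    return body.strip() == "Ollama is running"


def ollama_is_running(server_ip: str, timeout: float = 5.0) -> bool:
    """Return True if the server answers with the expected status message."""
    try:
        with urlopen(ollama_base_url(server_ip), timeout=timeout) as response:
            return is_ollama_banner(response.read().decode("utf-8", errors="replace"))
    except OSError:  # covers refused connections, timeouts, and HTTP errors
        return False
```

Calling `ollama_is_running("203.0.113.10")` with your server's IP returns `False` both when the service is down and when it is reachable only from localhost, so a negative result is a hint to check the service file as described in the last step.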

Updating Open WebUI

To update Open WebUI, log in to the server via SSH as root and run the following command:

docker run --rm --volume /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower --run-once open-webui

If updating through Watchtower doesn't work, execute the following commands:

docker stop open-webui

docker rm open-webui

docker run -d -p 8080:8080 --gpus all --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:cuda

Attention

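If you want to access the built-in API documentation for the Open WebUI endpoints, you need to run the container with the ENV=dev environment variable set, for example by adding -e ENV=dev to the docker run command above:

docker run -d -p 8080:8080 --gpus all -e ENV=dev --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:cuda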

Practical Articles and Tips

You can find more helpful information in our blog articles:

- Let's build a customer support chatbot using RAG and your company's documentation in OpenWebUI

- 10 Tips for Open WebUI to Enhance Your Work with AI

Ordering a Server with AI Chatbot via API

To install this software using the API, follow these instructions.