Free, self-hosted AI Chatbot built on Ollama, the Llama 3 LLM and the OpenWebUI interface.

A personal chatbot powered by Ollama, the open-source Llama 3 large language model and the OpenWebUI interface, running on your own server.

Rent a virtual (VPS) or dedicated server from HOSTKEY with a pre-installed, ready-to-use Self-hosted AI Chatbot that can process your data and documents using a variety of LLMs.

You can download the latest versions of the Phi3, Mistral, Gemma and Code Llama models.

Servers are available in the Netherlands, Finland, Germany and Iceland.

The "Self-hosted AI Chatbot" plan comes with a model pre-installed by default.

You can install or remove any model from the Ollama library. You can also run several models at the same time, as long as your server has enough resources to handle the load.
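If you prefer scripting to the web interface, models can also be managed through Ollama's REST API. The minimal Python sketch below assumes the API is reachable at its default address `http://localhost:11434` on the server. The endpoints `/api/pull`, `/api/tags` and `/api/delete` belong to Ollama, while the helper function names are hypothetical. The builders only construct requests, so you can inspect them before anything is sent.

```python
import json
import urllib.request
from typing import Optional

# Assumption: Ollama's REST API listens on its default port on the same server.
OLLAMA_URL = "http://localhost:11434"


def _json_request(path: str, method: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """Build (but do not send) a JSON request for the Ollama API."""
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    return urllib.request.Request(
        OLLAMA_URL + path,
        data=data,
        method=method,
        headers={"Content-Type": "application/json"},
    )


def pull_model_request(model: str) -> urllib.request.Request:
    # Download a model from the Ollama library; stream=False asks for one final status reply.
    return _json_request("/api/pull", "POST", {"model": model, "stream": False})


def list_models_request() -> urllib.request.Request:
    # List the models that are already installed on the server.
    return _json_request("/api/tags", "GET")


def delete_model_request(model: str) -> urllib.request.Request:
    # Remove an installed model, e.g. to free disk space.
    return _json_request("/api/delete", "DELETE", {"model": model})


def send(req: urllib.request.Request) -> dict:
    """Send a request to a running Ollama server and decode its JSON reply."""
    with urllib.request.urlopen(req) as resp:
        body = resp.read().decode("utf-8")
    # Some endpoints (such as delete) may answer with an empty body on success.
    return json.loads(body) if body else {}
```

For example, `send(list_models_request())` returns the installed models under the `"models"` key, and `send(pull_model_request("qwen3:14b"))` downloads that model again if it has been removed.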

An AI chatbot hosted on your own server keeps your data secure: all data is stored and processed within your own environment.

OpenWebUI lets you use multiple models in a single workspace, which speeds up your workflow. You can send one prompt to several models at once without switching windows, then compare the answers side by side and pick the best one.
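A similar side-by-side comparison can be scripted directly against the models. The sketch below assumes Ollama's REST API at its default address and uses its `/api/generate` endpoint with streaming disabled. `ask_all` is a hypothetical helper, not part of OpenWebUI: it sends one prompt to several models from a thread pool and collects the answers by model name.

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

# Assumption: Ollama's REST API listens on its default address.
OLLAMA_URL = "http://localhost:11434"


def generate(model: str, prompt: str) -> str:
    """Ask one model for a complete (non-streamed) answer via /api/generate."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL + "/api/generate",
        data=payload,  # a request with a body defaults to POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]


def ask_all(models: List[str], prompt: str,
            ask: Callable[[str, str], str] = generate) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and return {model: answer}."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        answers = list(pool.map(lambda m: ask(m, prompt), models))
    return dict(zip(models, answers))
```

Calling `ask_all(["qwen3:14b", "gemma"], "Explain what a VPS is in one sentence.")` returns a dictionary you can print side by side. Whether the models actually run in parallel depends on the server's memory and Ollama's concurrency settings. The `ask` parameter exists so that a stub can replace the network call when testing.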

Hosting an AI chatbot on your own server lets you run code reviews without the risk of code leaking outside the company, protecting corporate confidentiality. A self-hosted solution also scales well under increased workloads: you can add server capacity as needed.

Connect an AI chatbot to your knowledge base to generate answers to frequently asked questions from your users or employees.
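Answering FAQs from a knowledge base generally works by retrieving the entries most relevant to a question and placing them in the prompt (retrieval-augmented generation). The sketch below is a simplified, dependency-free illustration, not how OpenWebUI implements this feature: it substitutes plain word overlap for the embedding search that production setups typically use, and the FAQ format and function names are hypothetical.

```python
import re
from typing import List, Set, Tuple

# Hypothetical knowledge-base format: a list of (question, answer) pairs.
FAQ = List[Tuple[str, str]]


def tokens(text: str) -> Set[str]:
    """Lower-case word set used for a crude relevance score."""
    return set(re.findall(r"\w+", text.lower()))


def top_entries(question: str, faq: FAQ, k: int = 2) -> FAQ:
    """Return the k FAQ entries that share the most words with the question."""
    q = tokens(question)
    # sorted() is stable, so ties keep their original order.
    ranked = sorted(faq, key=lambda qa: len(q & tokens(qa[0] + " " + qa[1])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, faq: FAQ, k: int = 2) -> str:
    """Put the most relevant FAQ entries in front of the user's question."""
    context = "\n".join(f"Q: {q}\nA: {a}" for q, a in top_entries(question, faq, k))
    return (
        "Answer the question using only the FAQ entries below.\n\n"
        f"{context}\n\nQuestion: {question}\nAnswer:"
    )
```

The resulting prompt string can then be sent to any model installed on the server.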

Analyze corporate documents in various formats, such as pdf, csv, rst, xml, md, epub, doc, docx, xls, xlsx, ppt, and txt, while keeping all analytics on your server to ensure confidentiality.

A personal chatbot powered by Ollama, the open-source Llama 3 large language model and the OpenWebUI interface: a HOSTKEY solution built on official free and open-source software.

Ollama is an open-source project that serves as a powerful and user-friendly platform for running LLMs on your local machine. Ollama is licensed under the MIT License.

The Qwen3 model family is released under the free and permissive Apache 2.0 license, which makes its weights openly available for a wide range of uses.

Open WebUI is an extensible, feature-rich, and user-friendly self-hosted WebUI designed to operate entirely offline. OpenWebUI is licensed under the MIT License.

We guarantee that our servers are running safe and original software.

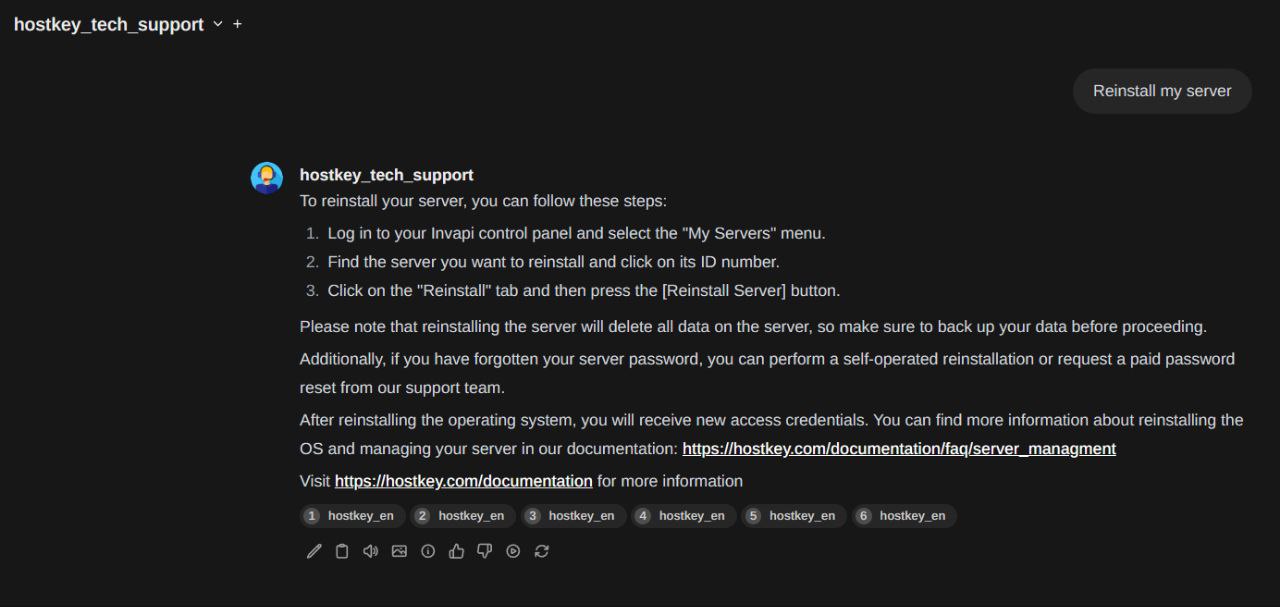

To install the AI Chatbot, select it on the "App Marketplace" tab (AI and Machine Learning group) when ordering a server on the HOSTKEY website. Our auto-deployment system will then install the software on your server.

The "Self-hosted AI Chatbot" plan includes a pre-installed qwen3:14b model by default. However, you can install and delete any models available in the Ollama library.

A self-hosted AI Chatbot has a number of advantages compared to popular paid services:

Choosing between a self-hosted AI chatbot and a SaaS platform such as ChatGPT or Gemini is a key decision when selecting an AI assistant. With the Ollama chatbot, you avoid vendor lock-in, manage your own infrastructure and get maximum privacy.

ChatGPT is a powerful tool, but your data is stored and processed outside your control. Running the Ollama chatbot on your own hardware or on an AI chatbot VPS keeps all data local, which is especially important in regulated industries such as healthcare and finance.

Google Gemini is closely tied to Google's ecosystem, and you cannot always see or change how it works. With Ollama hosting, you can modify or swap models and configure your stack as you wish, without ecosystem restrictions.

GitHub Copilot is focused on code generation but lacks flexibility for broader AI interactions. With the Ollama chatbot, you can hold wider-ranging conversations and control how it reasons, what it remembers and how it works with other systems.

Running the Ollama chatbot on your own VPS or dedicated server brings benefits both immediately and in the long term.

If you’re working on AI apps, the Ollama chatbot is the perfect partner for you.

If you’re working on agents, integrating vector databases or making changes to the UI, a self-hosted AI chatbot allows you to do things that cloud platforms cannot.

The system is designed to deliver high performance for Ollama hosting.

Latency benchmarks:

The difference between a VPS and a dedicated server

Many industries use Ollama chatbots to improve efficiency and the way users interact with their services.

Strong security is essential, and with a self-hosted AI chatbot you get it without compromise.

Any server can be deployed in just a few minutes. Choose from free or discounted AI software that comes pre-installed from our marketplace. Billing is hourly or monthly, and you can save up to 40% with a long-term plan.

AI-Dedicated-1

AI-Dedicated-2

AI-Dedicated-Pro

AI-VPS-Lite

AI-VPS-Pro

AI-VPS-Max

All of our servers are set up and ready for you to use right away. You can get the Ollama chatbot, Docker stacks and other AI tools directly from our marketplace.

Take full control of your AI infrastructure. Sign up for Ollama hosting, run your self-hosted AI chatbot on a powerful Ollama VPS or dedicated server, and get started in just a few minutes.