When working with streaming video, the quality and speed of playback are key. Is it possible to set up multi-stream broadcasting without buying expensive hardware? Let's see what we can do.

The problem

High-quality video broadcasting usually incurs serious costs: you need to allocate premises and build the engineering infrastructure for them, purchase equipment and hire staff to maintain it, rent data transmission channels, and handle all sorts of support work. Depending on the scale of the project, the capital expenditure alone can be substantial.

Custom and instant GPU servers equipped with professional-grade NVIDIA RTX 4000 / 5000 / A6000 cards

What is the alternative?

You can significantly reduce capital costs, turning them into operating costs, by renting cloud servers with GPUs, and it is worth relying on hardware transcoding with NVIDIA NVENC. Besides reducing costs, this makes live streaming much easier.

So, if you are setting up a stream, you should try FFmpeg.

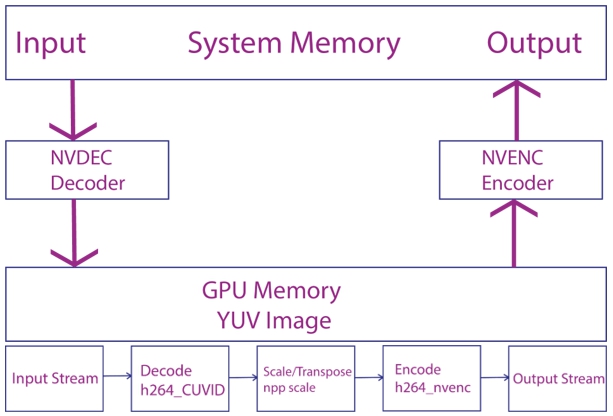

We use this free, open-source set of libraries for automated graphics card testing. Solutions built on it are easy to implement and maintain, and they decode and encode streams at high speed. The speed gain comes from not copying video frames into system memory: the data stays in the graphics card's memory while it is decoded and encoded.
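As a rough illustration of this idea, the sketch below wraps a transcode in which NVDEC decodes the input, the decoded frames stay in CUDA memory (`-hwaccel_output_format cuda`), and NVENC encodes them. It is a minimal sketch, assuming an FFmpeg build with CUDA support and an input codec that NVDEC can decode; the function name `gpu_transcode` and the `FFMPEG` override variable are hypothetical conveniences, not part of FFmpeg.

```bash
#!/usr/bin/env bash
# Hypothetical helper: transcode a file without copying frames to system memory.
# FFMPEG may point to a custom build; it defaults to "ffmpeg" on PATH.
gpu_transcode() {
  local input=$1 output=$2
  # -hwaccel cuda:               decode on the GPU (NVDEC)
  # -hwaccel_output_format cuda: keep decoded frames in GPU memory
  # -c:v h264_nvenc:             encode on the GPU (NVENC); audio is copied unchanged
  "${FFMPEG:-ffmpeg}" -hide_banner -nostdin \
    -hwaccel cuda -hwaccel_output_format cuda \
    -i "$input" \
    -c:v h264_nvenc -c:a copy \
    "$output"
}
```

Usage: `gpu_transcode bbb_sunflower_1080p_30fps_normal.mp4 out.mp4`. If you add video filters to such a pipeline, they also need to run on the GPU (for example `scale_cuda` instead of `scale`); otherwise frames have to be downloaded to system memory again.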

Scheme of the transcoding process using FFmpeg:

Driver patch and FFmpeg build

We will test on Ubuntu Linux, starting with gaming graphics cards: the GeForce GTX 1080 Ti and the GeForce RTX 3090. They are not intended for production projects, but they are quite capable of demonstrating the difference between transcoding on a CPU alone and on GPUs. NVIDIA does not consider these cards "qualified" for this workload and limits the maximum number of simultaneous NVENC encoding sessions. To get around this, you have to disable the restriction with a video driver patch published by enthusiasts on GitHub.

The patch is not required for professional graphics cards such as the RTX A4000 or A5000, since their driver imposes no hard limit on the number of concurrent encoding sessions. A list of NVIDIA graphics cards with NVENC support is available on the manufacturer's website. Developers can also use NVENC directly through the NVIDIA Video Codec SDK.
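To check how many concurrent NVENC sessions a particular card and driver accept, you can start several encodes at once and count how many succeed. The sketch below is a minimal probe under stated assumptions: `count_successful_sessions` is a hypothetical helper, and the synthetic 1080p test pattern (`testsrc2`) stands in for a real stream.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run the given command N times in parallel and print how many
# of those runs exited successfully.
count_successful_sessions() {
  local n=$1 i pid ok=0
  local -a pids=()
  shift
  for ((i = 0; i < n; i++)); do
    "$@" &              # start one session in the background
    pids+=("$!")
  done
  for pid in "${pids[@]}"; do
    wait "$pid" && ok=$((ok + 1))   # count sessions whose process exited with status 0
  done
  echo "$ok"
}

# Example: probe with four 1080p NVENC encodes of a synthetic test pattern
# count_successful_sessions 4 ffmpeg -hide_banner -nostdin -loglevel error \
#   -f lavfi -i testsrc2=size=1920x1080:rate=30 -t 10 -c:v h264_nvenc -f null -
```

With the stock driver on a consumer card, the printed count stops growing once the session limit is reached; `-nostdin` keeps the background FFmpeg processes from waiting on terminal input.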

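You also need to build FFmpeg with NVIDIA GPU support. We have not published our build in a repository yet, so here are detailed instructions for Ubuntu (the procedure is similar on other Linux distributions). They assume that the NVIDIA driver and the CUDA Toolkit are already installed; the configure flags expect CUDA in /usr/local/cuda: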

```bash
# Compiling for Linux
# FFmpeg with NVIDIA GPU acceleration is supported on all Linux platforms.
# To compile FFmpeg on Linux, do the following:

# Clone ffnvcodec (the NVIDIA codec headers)
git clone https://git.videolan.org/git/ffmpeg/nv-codec-headers.git

# Install ffnvcodec, then return to the previous directory
cd nv-codec-headers && sudo make install && cd -

# Clone FFmpeg's public Git repository
git clone https://git.ffmpeg.org/ffmpeg.git ffmpeg/

# Install the necessary packages (recent FFmpeg versions use nasm for x86 assembly)
sudo apt-get install build-essential yasm nasm cmake libtool libc6 libc6-dev unzip wget libnuma1 libnuma-dev

# Configure (run inside the FFmpeg source tree)
cd ffmpeg
./configure --enable-nonfree --enable-cuda-nvcc --enable-libnpp \
  --extra-cflags=-I/usr/local/cuda/include \
  --extra-ldflags=-L/usr/local/cuda/lib64 \
  --disable-static --enable-shared

# Compile with 8 parallel jobs
make -j 8

# Install the libraries
sudo make install

# Refresh the dynamic linker cache so the new shared libraries are found
sudo ldconfig
```
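After installation, it is worth checking that the new binary actually contains the NVIDIA encoders. A minimal sketch, assuming the freshly built `ffmpeg` is first on `PATH`; `list_nvenc_encoders` is a hypothetical helper that filters the output of `ffmpeg -encoders`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read an "ffmpeg -encoders" listing on stdin and print the
# names of NVENC encoders (second column, e.g. h264_nvenc, hevc_nvenc).
list_nvenc_encoders() {
  awk '$2 ~ /_nvenc$/ { print $2 }'
}

# Query the installed build, if ffmpeg is available.
if command -v ffmpeg >/dev/null 2>&1; then
  ffmpeg -hide_banner -encoders | list_nvenc_encoders
fi
```

An empty result means FFmpeg was configured without NVENC support, for example because the nv-codec-headers were not installed before running `./configure`.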

Stream Settings

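To serve the stream, we use ffserver, the streaming server that used to ship with FFmpeg. Note that ffserver was removed in FFmpeg 4.0, so a build from the current Git master does not include it; it is only available with FFmpeg 3.x releases. Edit its configuration file, ffserver.conf (the default path is /etc/ffserver.conf).

Example ffserver configuration for streaming:

```
# Port that the server will use for HTTP.
HTTPPort 8090

# Address on which the server listens (0.0.0.0 means all available addresses).
HTTPBindAddress 0.0.0.0

# Maximum number of simultaneous client requests.
MaxClients 1000

# Port and address for RTSP clients.
RTSPPort 5454
RTSPBindAddress 0.0.0.0

# "name" is the stream name used in the RTSP URL: rtsp://ip:5454/name
<Stream name>
Format rtp
File /root/file.name.mp4
ACL allow 0.0.0.0
#VideoCodec libx264
#VideoSize 1920x1080
</Stream>
```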

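Example commands to start streaming:

```bash
# Start the server with the configuration above
ffserver -f /etc/ffserver.conf &

# Push the source video into the server over HTTP
# (this requires a matching <Feed feed.ffm> section in ffserver.conf)
ffmpeg -i bbb_sunflower_1080p_30fps_normal.mp4 http://ip:8090/feed.ffm
```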

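Example of receiving the stream and transcoding it with the NVENC encoder on the GPU (connecting to the stream and saving the result to a file):

```bash
ffmpeg -i rtsp://ip:5454/nier -c:v h264_nvenc Output-File.mp4
```

In this form, only encoding runs on the GPU; decoding still happens on the CPU unless hardware decoding is enabled with `-hwaccel cuda`.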

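Sample `nvidia-smi` output confirms that FFmpeg is using the GPU: the `ffmpeg` process (PID 27564) occupies 152 MiB of video memory. The columns are GPU, GI ID, CI ID, PID, type, process name, and GPU memory usage.

```
0   N/A  N/A     27564      C   ffmpeg     152MiB
```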
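When many sessions are running, reading the full `nvidia-smi` table becomes tedious. A minimal sketch, assuming `nvidia-smi`'s CSV query mode is available; the helper name `summarize_ffmpeg_gpu` is hypothetical. It counts the FFmpeg processes on the GPU and sums their video memory.

```bash
#!/usr/bin/env bash
# Hypothetical helper: summarize FFmpeg processes from nvidia-smi CSV output on stdin.
# Expected input lines (no header, no units): "27564, ffmpeg, 152"
# i.e. PID, process name, GPU memory used in MiB.
summarize_ffmpeg_gpu() {
  awk -F', *' '
    $2 == "ffmpeg" || $2 ~ /\/ffmpeg$/ { n++; mem += $3 }
    END { printf "ffmpeg sessions: %d, GPU memory: %d MiB\n", n, mem }
  '
}

# Query the driver only when nvidia-smi is installed.
if command -v nvidia-smi >/dev/null 2>&1; then
  nvidia-smi --query-compute-apps=pid,process_name,used_memory \
    --format=csv,noheader,nounits | summarize_ffmpeg_gpu
fi
```

Matching both `ffmpeg` and paths ending in `/ffmpeg` covers the case where the driver reports the full path of a custom build.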

Testing

We compared transcoding of Full HD (1080p) live streams in H.264 High profile on ordinary consumer graphics cards. The GeForce RTX 3090 was tested both with the stock driver, which limits the number of concurrent encoding sessions, and with the patched driver (for the GTX 1080 Ti, testing without the patch seemed redundant to us). The source video was one of the Blender demo files, bbb_sunflower_1080p_30fps_normal.mp4.

The input stream had the following parameters:

| Parameter | Value |
|---|---|
| Video compression | H.264 |
| Resolution | 1920 x 1080 pixels |
| Frame rate | 30 fps |
| Video bitrate | 2.996 Mbit/s |
| Audio compression | AAC |
| Audio sampling rate | 48 kHz |
| Audio channels | Stereo |
| Audio bitrate | 479 kbit/s |

Full HD (1080p) is one of the most common resolutions for live streaming, and it generates a heavy computational load, which makes it a good test case.
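To check the same parameters on your own source file, you can query it with `ffprobe`, which is built alongside FFmpeg. A minimal sketch; `probe_video`, `fraction_to_fps`, and the `FFPROBE` override variable are hypothetical names. Note that `ffprobe` reports the frame rate as a fraction (for example `30/1`), so a small conversion helper is included.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print codec, profile, resolution, frame rate and bitrate of the
# first video stream. FFPROBE may point to a custom build (defaults to "ffprobe").
probe_video() {
  "${FFPROBE:-ffprobe}" -v error -select_streams v:0 \
    -show_entries stream=codec_name,profile,width,height,r_frame_rate,bit_rate \
    -of default=noprint_wrappers=1 "$1"
}

# Convert an ffprobe frame-rate fraction such as 30/1 or 30000/1001 to fps.
# A value without a denominator is treated as already being in fps.
fraction_to_fps() {
  awk -F/ '{ printf "%.2f\n", $1 / ($2 ? $2 : 1) }' <<< "$1"
}
```

Usage: `probe_video bbb_sunflower_1080p_30fps_normal.mp4`, then pass the `r_frame_rate` value to `fraction_to_fps`.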

Test conditions:

| | CPU test | GeForce GTX 1080 Ti | GeForce RTX 3090 |
|---|---|---|---|
| CPU | 4 x VPS core | 4 x VPS core | 1 x Xeon E3-1230 v6 3.5 GHz (4 cores) |
| RAM | 1 x VPS RAM 16 GB | 1 x VPS RAM 16 GB | 2 x 16 GB DDR4 |
| Storage | 1 x VPS HDD 240 GB | 1 x VPS HDD 240 GB | 1 x 512 GB SSD, 1 x 120 GB SSD |
| Other hardware | 1 x vGPU 1080 Ti | 1 x vGPU 1080 Ti | 1 x RTX 3090 |

During testing, we recorded the following GPU load (nvidia-smi readings):

| GPU | Fan | Temp | Perf | Pwr:Usage/Cap | Memory-Usage |
|---|---|---|---|---|---|
| GeForce GTX 1080 Ti | 59% | 82 °C | P2 | 86 W / 250 W | 5493 MiB / 11178 MiB |
| GeForce RTX 3090 | 43% | 51 °C | P2 | 149 W / 350 W | 22806 MiB / 24267 MiB |


The CPU-only test (no GPU) worked, but it pushed the server to its limit: all compute cores and all available memory were in use, and the output was only a few streams. Such a high processor load rules this method out for a real broadcast because of the risk of critical errors and failures: the CPU alone cannot sustain a large number of parallel transcoding sessions.

For the GPU tests, a single stream was fed to the decoder input, and the transcoded streams were distributed at the output over RTSP. Note that the GeForce RTX 3090 with the stock driver handled only three streams. When we tried to process more, the encoder failed to open; despite the "out of memory" wording, the error reflects the driver's session limit rather than a real shortage of video memory:

```
[h264_nvenc @ 0x55ddbdd3ef80] OpenEncodeSessionEx failed: out of memory (10): (no details)
[h264_nvenc @ 0x55ddbdd3ef80] No capable devices found
Error initializing output stream 0:0 -- Error while opening encoder for output stream #0:0 - maybe incorrect parameters such as bit_rate, rate, width or height
```
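In a long-running broadcast, it helps to detect this failure automatically instead of reading logs by hand. A minimal sketch; `classify_nvenc_log` is a hypothetical helper that reads an FFmpeg log on stdin and matches the messages quoted above. Treating the `out of memory` code at session creation as the session limit is an assumption based on the behavior described here for consumer cards; verify it for your driver version.

```bash
#!/usr/bin/env bash
# Hypothetical helper: classify an FFmpeg log read from stdin.
#   session-limit       - NVENC refused a new session with "out of memory"
#                         (assumed to be the concurrent-session cap on consumer cards)
#   session-open-failed - NVENC could not open a session for another reason
#   ok                  - no NVENC session errors found
classify_nvenc_log() {
  local log
  log=$(cat)
  if grep -q 'OpenEncodeSessionEx failed: out of memory' <<< "$log"; then
    echo session-limit
  elif grep -q 'OpenEncodeSessionEx failed' <<< "$log"; then
    echo session-open-failed
  else
    echo ok
  fi
}
```

Usage: redirect FFmpeg's stderr to a file (`ffmpeg ... 2> encode.log`), then run `classify_nvenc_log < encode.log`.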

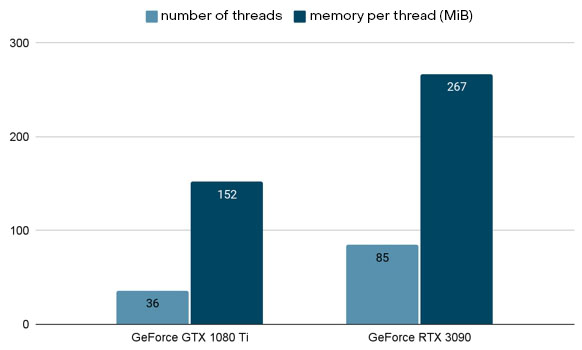

The number of threads after applying the patch to the video driver and the amount of memory used are shown in the diagram:

The number of streams each card can process is limited by both GPU memory and system RAM. Different variants of the GeForce RTX 3090 differ in the amount of video memory, but they process the same number of streams, which is determined by the configuration of the test machine (32 GB of RAM). Below is sample `free -h` output from the test bench with the GeForce RTX 3090:

| | Total | Used | Free | Shared | Buff/cache | Available |
|---|---|---|---|---|---|---|
| Mem | 31 G | 11 G | 234 M | 1.3 G | 19 G | 18 G |
| Swap | 4.0 G | 1.0 M | 4.0 G | | | |
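The observation that capacity is bounded by both GPU memory and system RAM can be turned into a back-of-the-envelope estimate: the number of streams is at most the smaller of (available GPU memory / GPU memory per stream) and (available RAM / RAM per stream). A minimal sketch; `max_streams` is a hypothetical helper, the per-stream figures must be measured on your own setup (for example with `nvidia-smi` and `free`), and overhead from the OS and driver is ignored.

```bash
#!/usr/bin/env bash
# Hypothetical helper: estimate how many transcoding streams fit in memory.
# Arguments (all in MiB): GPU memory available, GPU memory per stream,
#                         RAM available, RAM per stream.
max_streams() {
  local gpu_total=$1 gpu_per=$2 ram_total=$3 ram_per=$4
  local by_gpu=$(( gpu_total / gpu_per ))   # integer division rounds down
  local by_ram=$(( ram_total / ram_per ))
  echo $(( by_gpu < by_ram ? by_gpu : by_ram ))
}
```

For example, with 24267 MiB of video memory, the 152 MiB per `ffmpeg` process from the `nvidia-smi` sample, and a purely hypothetical 350 MiB of RAM per stream out of 32000 MiB, `max_streams 24267 152 32000 350` prints 91, so in that scenario RAM would be the bottleneck.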

Conclusions

Testing on consumer graphics cards required intrusive changes to system software (the driver patch), but even so it shows that GPU servers can transcode live streams under heavy load.

That is, FFmpeg is a perfectly viable choice for high-quality broadcasting without buying commercial software and expensive workstations. For example, as a budget option for video surveillance, saving streams from several dozen cameras to files, you can take a machine with a single GeForce GTX 1080 Ti and write the streams to a NAS yourself.
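A minimal sketch of that surveillance setup, assuming the cameras expose RTSP URLs and the NAS is mounted locally (for example at `/mnt/nas`); `camera_basename` and `record_cameras` are hypothetical helpers, not an existing tool. Each camera gets its own FFmpeg process, and therefore its own NVENC session, so on consumer cards the driver's session limit applies unless the driver is patched.

```bash
#!/usr/bin/env bash
# Hypothetical sketch: record several RTSP cameras to a NAS, one FFmpeg/NVENC session each.

# Turn an RTSP URL into a file-name-safe label, e.g. rtsp://cam1:554/live -> cam1_554_live
camera_basename() {
  local url=${1#*://}                       # drop the scheme
  printf '%s\n' "${url//[^A-Za-z0-9]/_}"    # replace unsafe characters with "_"
}

# Usage: record_cameras DEST_DIR URL [URL...]
record_cameras() {
  local dest=$1 url name
  shift
  for url in "$@"; do
    name=$(camera_basename "$url")
    # Decode and encode on the GPU, drop audio, and cut hourly files named by start time.
    ffmpeg -hide_banner -nostdin -loglevel error -rtsp_transport tcp \
      -hwaccel cuda -hwaccel_output_format cuda -i "$url" \
      -c:v h264_nvenc -an \
      -f segment -segment_time 3600 -reset_timestamps 1 -strftime 1 \
      "$dest/${name}_%Y%m%d_%H%M%S.mp4" &
  done
  wait   # keep running until all recordings stop
}
```

Usage: `record_cameras /mnt/nas rtsp://cam1:554/live rtsp://cam2:554/live`. Audio is dropped with `-an` because many cameras either have no audio track or use codecs that MP4 does not support.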

The solution also scales easily: changing the number of streams takes little time and no significant additional computing power.

Of course, NVIDIA's licensing terms do not allow gaming cards to be used in a data center (as opposed to your own office or site), and there is no need to: NVIDIA has professional product lines for this. We will talk about experiments with them in the next part of the article.