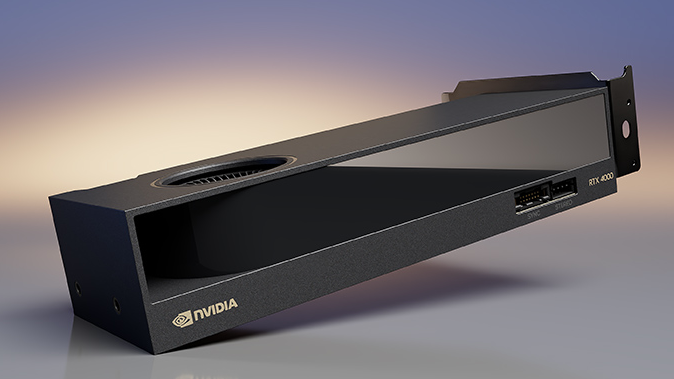

In April, NVIDIA launched a new product, the RTX A4000 ADA (officially the RTX 4000 SFF Ada Generation), a small form factor GPU designed for workstation applications. The card replaces the A2000 and is suited to demanding tasks, including scientific research, engineering calculations and data visualization.

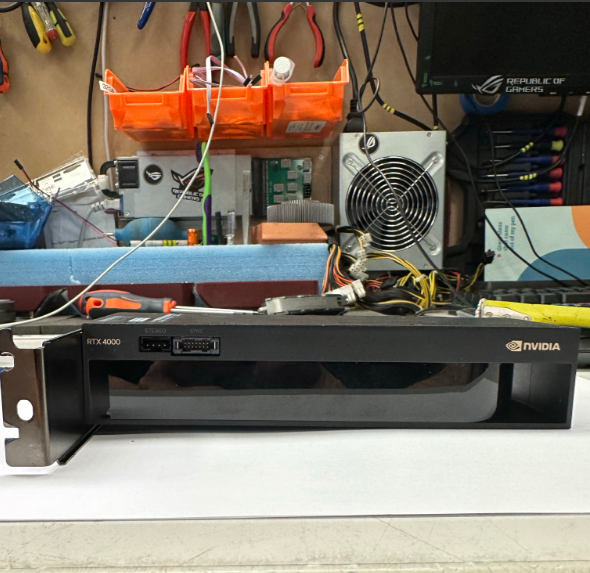

The RTX A4000 ADA features 6,144 CUDA cores, 192 Tensor cores, 48 RT cores and 20GB of GDDR6 ECC memory. One of its key benefits is power efficiency: the card draws only 70W, which lowers both power costs and system heat. It can also drive up to four displays through its four Mini-DisplayPort 1.4a outputs.
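To put the 70W figure in perspective, the sketch below estimates annual energy use at full load for the RTX A4000 ADA and the 140W RTX A4000. The 24/7 full-load duty cycle and the $0.15/kWh electricity price are hypothetical assumptions, not measurements; real workloads rarely keep a card at its power limit all year.

```python
HOURS_PER_YEAR = 24 * 365  # assumption: the card runs around the clock at full load


def annual_energy_kwh(power_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kWh for a device drawing `power_watts` continuously for `hours`."""
    return power_watts * hours / 1000.0


def annual_cost_usd(power_watts: float, price_per_kwh: float) -> float:
    """Annual electricity cost at a flat tariff."""
    return annual_energy_kwh(power_watts) * price_per_kwh


PRICE_PER_KWH = 0.15  # hypothetical tariff in USD, not taken from the article

for name, watts in [("RTX A4000 ADA", 70), ("RTX A4000", 140)]:
    print(f"{name}: {annual_energy_kwh(watts):.1f} kWh, "
          f"${annual_cost_usd(watts, PRICE_PER_KWH):.2f} per year")
```

Under these assumptions the two cards differ by about 613 kWh, or roughly $92, per year; plug in your own tariff and duty cycle for a realistic figure.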

Compared with other cards in its class, the RTX 4000 SFF ADA delivers single-precision performance similar to that of the previous-generation RTX A4000, which consumes twice as much power (140W vs. 70W).
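The efficiency gap follows directly from the peak figures in the specification table later in this article. The sketch below divides single-precision throughput by maximum board power; these are spec-sheet peaks, so measured efficiency will depend on the workload.

```python
# Peak single-precision throughput (TFLOPS) and maximum board power (W),
# as listed in the specification table of this article.
cards = {
    "RTX A4000 ADA": (19.2, 70),
    "RTX A4000": (19.2, 140),
}


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Peak single-precision throughput per watt of board power."""
    return tflops / watts


efficiency = {name: tflops_per_watt(*spec) for name, spec in cards.items()}
for name, value in efficiency.items():
    print(f"{name}: {value * 1000:.0f} GFLOPS/W")

ratio = efficiency["RTX A4000 ADA"] / efficiency["RTX A4000"]
print(f"Efficiency ratio: {ratio:.1f}x")
```

With identical peak throughput, the ratio is simply the inverse ratio of the power limits, about 2x in favour of the new card.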

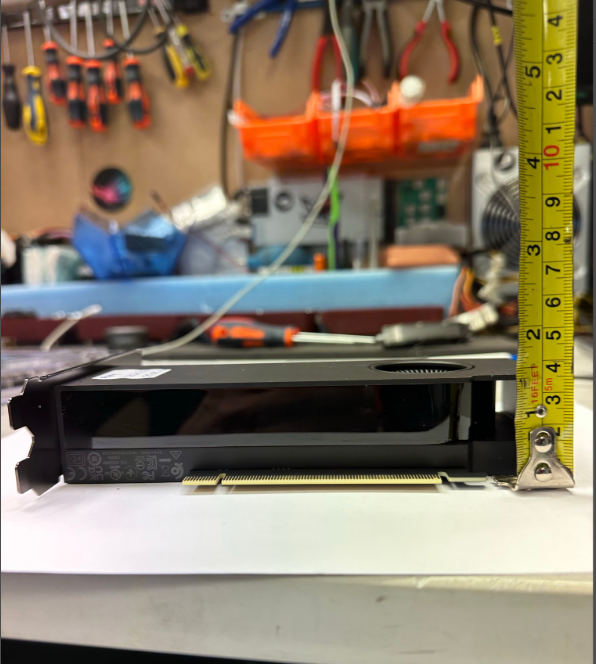

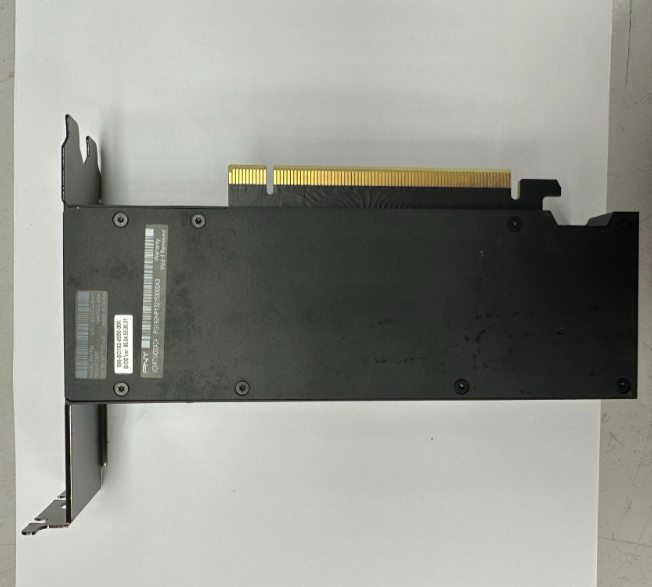

The RTX 4000 SFF ADA is built on the Ada Lovelace architecture using a 5nm process. The architecture brings next-generation Tensor and ray tracing (RT) cores that are faster and more efficient than those in the RTX A4000. The card also comes in a compact package: it is 168mm long and occupies two expansion slots.

The improved RT cores deliver efficient performance in ray-traced workloads such as 3D design and rendering, and the 20GB of memory lets the card handle large scenes.

According to NVIDIA, the fourth-generation Tensor cores deliver up to twice the AI performance of the previous generation. They also add FP8 acceleration, which may benefit teams developing and deploying AI models in fields such as genomics and computer vision.
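Whether FP8 Tensor Cores are available depends on the GPU's CUDA compute capability: Ada Lovelace GPUs such as the AD104 report 8.9, while the Ampere cards in this comparison report 8.6. The sketch below encodes that check. The 8.9 threshold is our assumption about where FP8 Tensor Core support begins, and the optional device query uses PyTorch's `torch.cuda.get_device_capability` only if PyTorch with CUDA is installed.

```python
from typing import Optional, Tuple

# Assumption: FP8 Tensor Cores first appear at compute capability 8.9 (Ada Lovelace);
# newer architectures such as Hopper (9.0) also support them.
FP8_MIN_CAPABILITY = (8, 9)


def supports_fp8_tensor_cores(capability: Tuple[int, int]) -> bool:
    """Return True if a (major, minor) compute capability is at least 8.9."""
    return tuple(capability) >= FP8_MIN_CAPABILITY


def current_device_capability() -> Optional[Tuple[int, int]]:
    """Query the first CUDA device via PyTorch, or return None if unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_capability(0)


if __name__ == "__main__":
    print(supports_fp8_tensor_cores((8, 6)))  # Ampere, e.g. RTX A4000
    print(supports_fp8_tensor_cores((8, 9)))  # Ada, e.g. AD104
    cap = current_device_capability()
    if cap is not None:
        print(f"Local GPU {cap}: FP8 Tensor Cores = {supports_fp8_tensor_cores(cap)}")
```

Comparing tuples keeps the check correct for future major versions (for example `(9, 0)` compares greater than `(8, 9)`).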

Improved video encoding and decoding engines also make the RTX 4000 SFF ADA a good choice for multimedia workloads such as video processing.

Technical specifications of the RTX A4000 ADA, RTX A4000, RTX A5000 and RTX 3090

| Specification | RTX A4000 ADA | RTX A4000 | RTX A5000 | RTX 3090 |
|---|---|---|---|---|
| Architecture | Ada Lovelace | Ampere | Ampere | Ampere |
| Process | 5 nm | 8 nm | 8 nm | 8 nm |
| GPU | AD104 | GA104 | GA102 | GA102 |
| Transistors (millions) | 35,800 | 17,400 | 28,300 | 28,300 |
| Memory bandwidth (GB/s) | 280 | 448 | 768 | 936.2 |
| Memory bus width (bits) | 160 | 256 | 384 | 384 |
| GPU memory (GB) | 20 | 16 | 24 | 24 |
| Memory type | GDDR6 | GDDR6 | GDDR6 | GDDR6X |
| CUDA cores | 6,144 | 6,144 | 8,192 | 10,496 |
| Tensor cores | 192 | 192 | 256 | 328 |
| RT cores | 48 | 48 | 64 | 82 |
| Single-precision performance (TFLOPS) | 19.2 | 19.2 | 27.8 | 35.6 |
| RT core performance (TFLOPS) | 44.3 | 37.4 | 54.2 | 69.5 |
| Tensor performance (TFLOPS) | 306.8 | 153.4 | 222.2 | 285 |
| Maximum power (W) | 70 | 140 | 230 | 350 |
| Interface | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 | PCIe 4.0 x16 |
| Display outputs | 4x Mini DisplayPort 1.4a | 4x DisplayPort 1.4 | 4x DisplayPort 1.4 | 4x DisplayPort 1.4 |
| Form factor | 2 slots | 1 slot | 2 slots | 2-3 slots |
| vGPU software | No | No | Yes, unlimited | Yes, with limitations |
| NVLink | No | No | Yes (2x RTX A5000) | Yes |
| CUDA compute capability | 8.9 | 8.6 | 8.6 | 8.6 |
| Vulkan support | 1.3 | Yes | Yes | Yes, 1.2 |
| Price (USD) | 1,250 | 1,000 | 2,500 | 1,400 |
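The bandwidth and bus-width rows are linked: peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. The per-pin rates in the sketch below (14, 14, 16 and 19.5 Gbps) are not listed in the table; they are the values implied by dividing each card's bandwidth by its bus width, so treat them as an assumption used to check the arithmetic.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: (bus width / 8 bits per byte) * per-pin data rate."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits from the table, assumed per-pin data rate in Gbps)
cards = {
    "RTX A4000 ADA": (160, 14.0),
    "RTX A4000": (256, 14.0),
    "RTX A5000": (384, 16.0),
    "RTX 3090": (384, 19.5),
}

for name, (bus, rate) in cards.items():
    print(f"{name}: {memory_bandwidth_gb_s(bus, rate):.1f} GB/s")
```

The first three results match the table exactly; for the RTX 3090, 19.5 Gbps gives 936 GB/s against the listed 936.2. The calculation also shows why the RTX A4000 ADA, despite its 20GB capacity, has the lowest bandwidth of the four: its 160-bit bus is the narrowest.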

Description of the test environment

| Component | RTX A4000 ADA system | RTX A4000 system |
|---|---|---|
| CPU | AMD Ryzen 9 5950X, 3.4 GHz (16 cores) | Intel Xeon E-2288G, 3.5 GHz (8 cores) |
| RAM | 4x 32 GB DDR4 ECC SO-DIMM | 2x 32 GB DDR4-3200 ECC SDRAM (1600 MHz clock) |
| Drive | 1 TB NVMe SSD | Samsung SSD 980 PRO 1TB |
| Motherboard | ASRock X570D4I-2T | Asus P11C-I Series |
| Operating system | Microsoft Windows 10 | Microsoft Windows 10 |

Test results

V-Ray 5 Benchmark

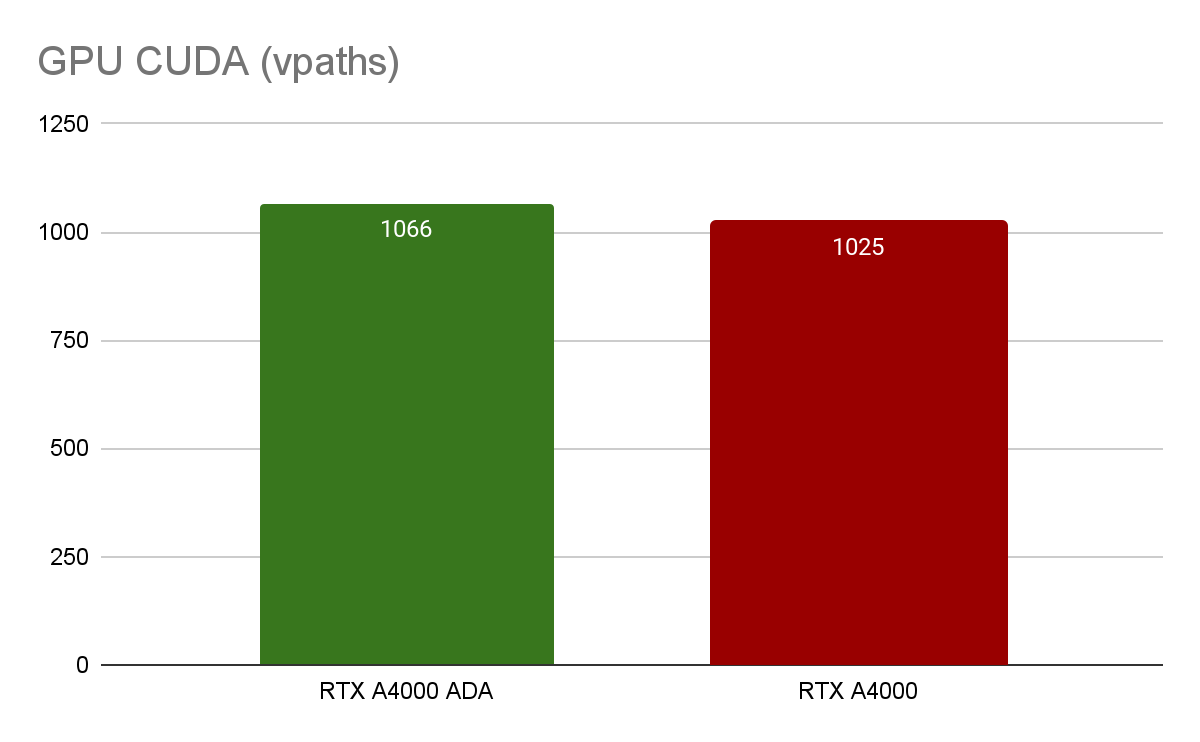

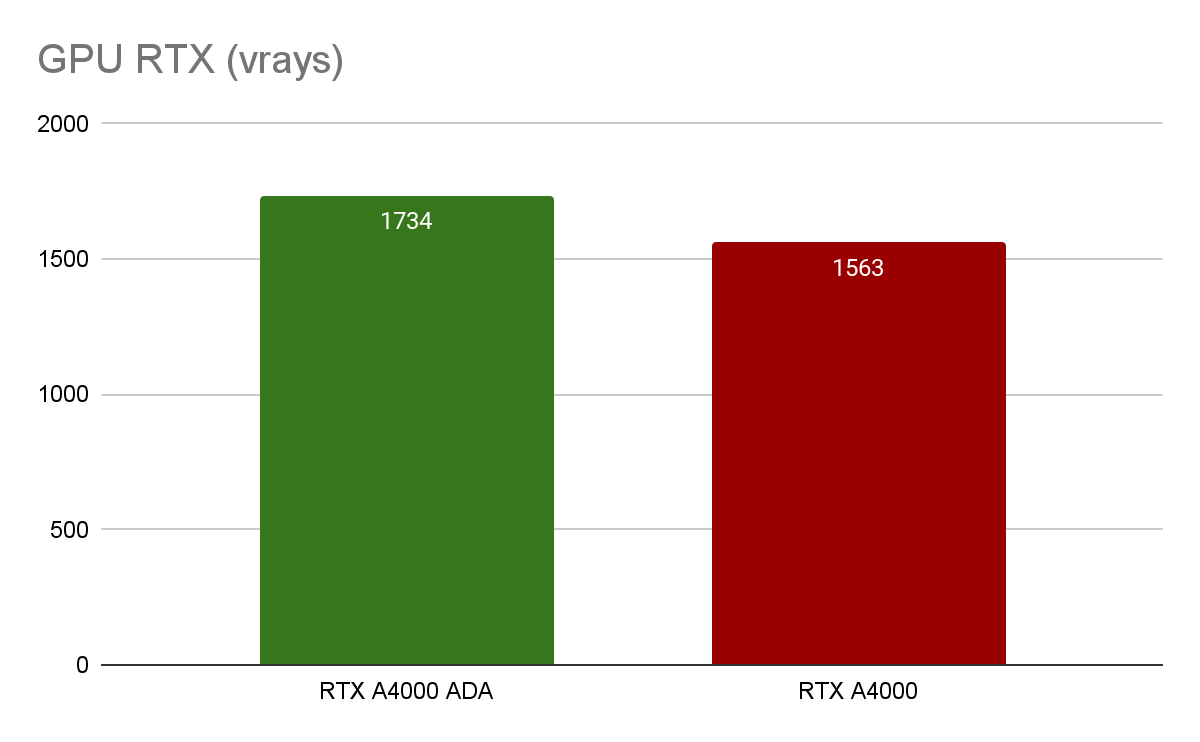

The V-Ray GPU CUDA and RTX tests measure relative GPU rendering performance. In both, the RTX A4000 trails the RTX A4000 ADA slightly: by 4% in the CUDA test and by 11% in the RTX test.

Machine Learning

"Dogs vs. Cats"

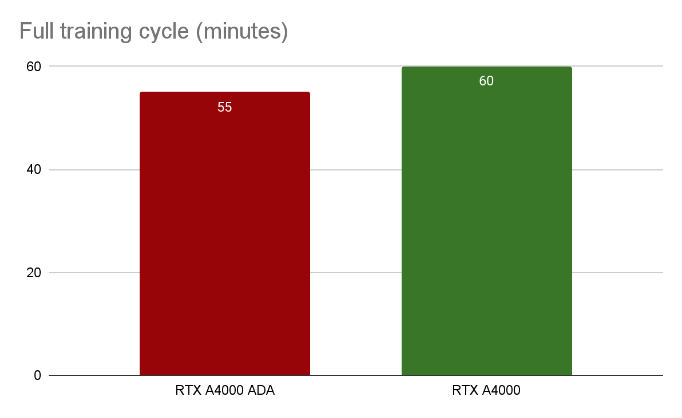

To compare GPU performance on neural networks, we used the "Dogs vs. Cats" dataset: the test analyzes the content of a photo and determines whether it shows a cat or a dog. All the necessary raw data can be found here. We ran this test on different GPUs and cloud services and got the following results:

In this test, the RTX A4000 ADA outperformed the RTX A4000 by 9%, a notable result given the new GPU's smaller size and lower power consumption.

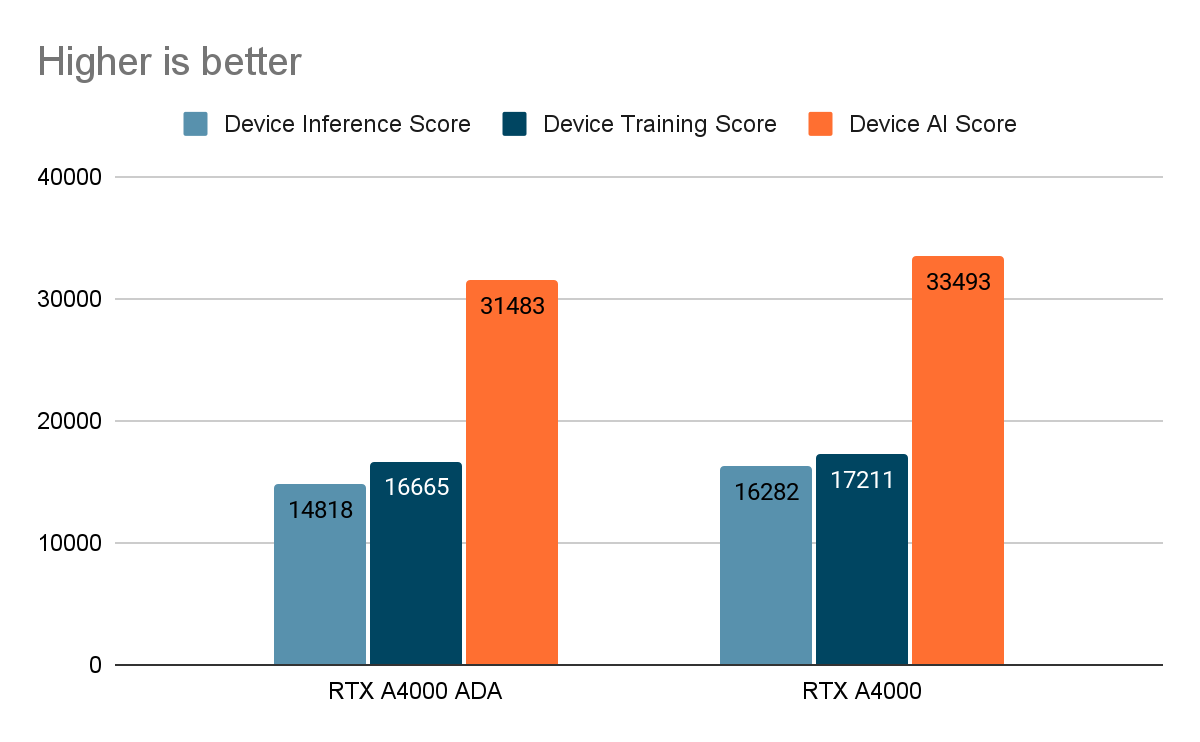
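In the widely used Kaggle release of "Dogs vs. Cats", the class is encoded in each training file name (for example `cat.0.jpg` or `dog.0.jpg`). Assuming the data used here follows that layout, labels can be derived as in the sketch below; the function names and the 0/1 encoding are our own hypothetical choices, not part of the benchmark.

```python
from pathlib import Path
from typing import Iterable, List, Tuple

CLASS_TO_INDEX = {"cat": 0, "dog": 1}  # hypothetical label encoding


def label_from_filename(path: str) -> int:
    """Map a file such as 'train/dog.123.jpg' to its class index."""
    prefix = Path(path).name.split(".", 1)[0].lower()
    if prefix not in CLASS_TO_INDEX:
        raise ValueError(f"Unrecognized file name: {path}")
    return CLASS_TO_INDEX[prefix]


def build_index(paths: Iterable[str]) -> List[Tuple[str, int]]:
    """Pair every image path with its label, e.g. to feed a training data loader."""
    return [(p, label_from_filename(p)) for p in paths]


print(build_index(["train/cat.0.jpg", "train/dog.12.jpg"]))
```

Raising on unknown prefixes makes stray files (for example unlabeled test images) fail loudly instead of silently receiving a wrong label.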

AI-Benchmark

AI-Benchmark measures how a device performs when running AI models for inference and training. Depending on the test, results can be expressed in operations per second (OPS) or frames per second (FPS); in the table below, each result is the average time per batch in milliseconds, so lower is better.
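When a throughput figure is more convenient than a per-batch latency, it can be derived from the batch size. The helper below is our own sketch, not part of AI-Benchmark; it is applied to the MobileNet-V2 inference results (batch=50, 224x224) from the table.

```python
def images_per_second(batch_size: int, ms_per_batch: float) -> float:
    """Convert an average per-batch latency in ms into throughput (samples per second)."""
    return batch_size * 1000.0 / ms_per_batch


# MobileNet-V2 inference, batch=50, 224x224 (values from the AI-Benchmark table)
for name, ms in [("RTX A4000", 38.5), ("RTX A4000 ADA", 53.5)]:
    print(f"{name}: {images_per_second(50, ms):.0f} images/s")
```

The conversion assumes the reported time covers the whole batch, which is how the table lists each result.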

| Model | Test | Settings | RTX A4000 (ms) | RTX A4000 ADA (ms) |
|---|---|---|---|---|
| 1/19. MobileNet-V2 | 1.1 inference | batch=50, size=224x224 | 38.5 ± 2.4 | 53.5 ± 0.7 |
| | 1.2 training | batch=50, size=224x224 | 109 ± 4 | 130.1 ± 0.6 |
| 2/19. Inception-V3 | 2.1 inference | batch=20, size=346x346 | 36.1 ± 1.8 | 36.8 ± 1.1 |
| | 2.2 training | batch=20, size=346x346 | 137.4 ± 0.6 | 147.5 ± 0.8 |
| 3/19. Inception-V4 | 3.1 inference | batch=10, size=346x346 | 34.0 ± 0.9 | 33.0 ± 0.8 |
| | 3.2 training | batch=10, size=346x346 | 139.4 ± 1.0 | 135.7 ± 0.9 |
| 4/19. Inception-ResNet-V2 | 4.1 inference | batch=10, size=346x346 | 45.7 ± 0.6 | 33.6 ± 0.7 |
| | 4.2 training | batch=8, size=346x346 | 153.4 ± 0.8 | 132 ± 1 |
| 5/19. ResNet-V2-50 | 5.1 inference | batch=10, size=346x346 | 25.3 ± 0.5 | 26.1 ± 0.5 |
| | 5.2 training | batch=10, size=346x346 | 91.1 ± 0.8 | 92.3 ± 0.6 |
| 6/19. ResNet-V2-152 | 6.1 inference | batch=10, size=256x256 | 32.4 ± 0.5 | 23.7 ± 0.6 |
| | 6.2 training | batch=10, size=256x256 | 131.4 ± 0.7 | 107.1 ± 0.9 |
| 7/19. VGG-16 | 7.1 inference | batch=20, size=224x224 | 54.9 ± 0.9 | 66.3 ± 0.9 |
| | 7.2 training | batch=2, size=224x224 | 83.6 ± 0.7 | 109.3 ± 0.8 |
| 8/19. SRCNN 9-5-5 | 8.1 inference | batch=10, size=512x512 | 51.5 ± 0.9 | 59.9 ± 1.6 |
| | 8.2 inference | batch=1, size=1536x1536 | 45.7 ± 0.9 | 53.1 ± 0.7 |
| | 8.3 training | batch=10, size=512x512 | 183 ± 1 | 176 ± 2 |
| 9/19. VGG-19 Super-Res | 9.1 inference | batch=10, size=256x256 | 99.5 ± 0.8 | not reported |
| | 9.2 inference | batch=1, size=1024x1024 | 162 ± 1 | not reported |
| | 9.3 training | batch=10, size=224x224 | 204 ± 2 | not reported |
| 10/19. ResNet-SRGAN | 10.1 inference | batch=10, size=512x512 | 85.8 ± 0.6 | 98.9 ± 0.8 |
| | 10.2 inference | batch=1, size=1536x1536 | 82.4 ± 1.9 | 86.1 ± 0.6 |
| | 10.3 training | batch=5, size=512x512 | 133 ± 1 | 130.9 ± 0.6 |
| 11/19. ResNet-DPED | 11.1 inference | batch=10, size=256x256 | 114.9 ± 0.6 | 146.4 ± 0.5 |
| | 11.2 inference | batch=1, size=1024x1024 | 182 ± 2 | 234.3 ± 0.5 |
| | 11.3 training | batch=15, size=128x128 | 178.1 ± 0.8 | 234.7 ± 0.6 |
| 12/19. U-Net | 12.1 inference | batch=4, size=512x512 | 180.8 ± 0.7 | 222.9 ± 0.5 |
| | 12.2 inference | batch=1, size=1024x1024 | 177.0 ± 0.4 | 220.4 ± 0.6 |
| | 12.3 training | batch=4, size=256x256 | 198.6 ± 0.5 | 229.1 ± 0.7 |
| 13/19. Nvidia-SPADE | 13.1 inference | batch=5, size=128x128 | 54.5 ± 0.5 | 59.6 ± 0.6 |
| | 13.2 training | batch=1, size=128x128 | 103.6 ± 0.6 | 94.6 ± 0.6 |
| 14/19. ICNet | 14.1 inference | batch=5, size=1024x1536 | 126.3 ± 0.8 | 144 ± 4 |
| | 14.2 training | batch=10, size=1024x1536 | 426 ± 9 | 475 ± 17 |
| 15/19. PSPNet | 15.1 inference | batch=5, size=720x720 | 249 ± 12 | 291.4 ± 0.5 |
| | 15.2 training | batch=1, size=512x512 | 104.6 ± 0.6 | 99.8 ± 0.9 |
| 16/19. DeepLab | 16.1 inference | batch=2, size=512x512 | 71.7 ± 0.6 | 71.5 ± 0.7 |
| | 16.2 training | batch=1, size=384x384 | 84.9 ± 0.5 | 69.4 ± 0.6 |
| 17/19. Pixel-RNN | 17.1 inference | batch=50, size=64x64 | 299 ± 14 | 321 ± 30 |
| | 17.2 training | batch=10, size=64x64 | 1258 ± 64 | 1278 ± 74 |
| 18/19. LSTM-Sentiment | 18.1 inference | batch=100, size=1024x300 | 395 ± 11 | 345 ± 10 |
| | 18.2 training | batch=10, size=1024x300 | 676 ± 15 | 774 ± 17 |
| 19/19. GNMT-Translation | 19.1 inference | batch=1, size=1x20 | 119 ± 2 | 156 ± 1 |

Overall, the RTX A4000 was about 6% faster than the RTX A4000 ADA in this benchmark. Results vary by task, however: the RTX A4000 ADA was faster in several tests, including Inception-ResNet-V2, ResNet-V2-152 and DeepLab training, so the outcome depends on the specific workload and operating conditions.
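Because the suite mixes tests lasting tens of milliseconds with tests lasting over a second, per-test ratios are usually combined with a geometric mean rather than by summing times. The sketch below shows that method on four rows copied from the AI-Benchmark table above. It only illustrates the technique; it is not AI-Benchmark's own scoring, and its output for this hand-picked subset should not be read as the overall result.

```python
import math
from typing import Dict, Iterable, Tuple

# Selected rows: test -> (RTX A4000 ms, RTX A4000 ADA ms); lower time is better.
results: Dict[str, Tuple[float, float]] = {
    "1.1 MobileNet-V2 inference": (38.5, 53.5),
    "4.1 Inception-ResNet-V2 inference": (45.7, 33.6),
    "6.1 ResNet-V2-152 inference": (32.4, 23.7),
    "19.1 GNMT-Translation inference": (119.0, 156.0),
}


def speed_ratio(time_a4000: float, time_ada: float) -> float:
    """Values above 1.0 mean the RTX A4000 ADA finished faster than the RTX A4000."""
    return time_a4000 / time_ada


def geometric_mean(values: Iterable[float]) -> float:
    """Geometric mean, computed in log space for numerical stability."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))


ratios = {test: speed_ratio(*times) for test, times in results.items()}
for test, r in ratios.items():
    print(f"{test}: {r:.2f}")
print(f"Geometric mean (selected rows only): {geometric_mean(ratios.values()):.2f}")
```

A geometric mean treats a 2x win and a 2x loss as cancelling out, which an arithmetic mean of ratios does not.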

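PyTorch

For the RTX A4000, only double-precision results were recorded; for the RTX A4000 ADA, both half- and double-precision results are listed. All values are average times per iteration in milliseconds.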
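The exact benchmark script is not named in this article. The sketch below only illustrates the usual way an average time per iteration is measured: untimed warm-up runs, then the mean of timed runs. `run_step` and `dummy_step` are hypothetical stand-ins for one training or inference step; with PyTorch on a GPU, the device must also be synchronized (for example with `torch.cuda.synchronize()`) before each clock reading, because CUDA kernels run asynchronously.

```python
import time
from typing import Callable


def average_step_ms(run_step: Callable[[], None], warmup: int = 5, iterations: int = 20) -> float:
    """Mean wall-clock time of `run_step` in milliseconds, after untimed warm-up calls."""
    for _ in range(warmup):
        run_step()  # warm-up: caches, memory allocation, kernel selection
    total = 0.0
    for _ in range(iterations):
        start = time.perf_counter()
        run_step()
        # For CUDA work, call torch.cuda.synchronize() here before stopping the clock.
        total += time.perf_counter() - start
    return total * 1000.0 / iterations


def dummy_step() -> None:
    """Hypothetical placeholder workload standing in for a model step."""
    sum(i * i for i in range(10_000))


print(f"{average_step_ms(dummy_step):.3f} ms per step")
```

Excluding warm-up iterations matters most on GPUs, where the first calls include one-time costs such as context creation and kernel autotuning.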

RTX A4000

| Benchmark | Average time per iteration (ms) |
|---|---|
| Training double precision type mnasnet0_5 | 63.00 |
| Training double precision type mnasnet0_75 | 98.39 |
| Training double precision type mnasnet1_0 | 126.60 |
| Training double precision type mnasnet1_3 | 186.89 |
| Training double precision type resnet18 | 428.08 |
| Training double precision type resnet34 | 883.58 |
| Training double precision type resnet50 | 1016.40 |
| Training double precision type resnet101 | 1927.23 |
| Training double precision type resnet152 | 2815.66 |
| Training double precision type resnext50_32x4d | 1075.44 |
| Training double precision type resnext101_32x8d | 4050.06 |
| Training double precision type wide_resnet50_2 | 2616.00 |
| Training double precision type wide_resnet101_2 | 5218.52 |
| Training double precision type densenet121 | 751.98 |
| Training double precision type densenet169 | 910.32 |
| Training double precision type densenet201 | 1163.04 |
| Training double precision type densenet161 | 2141.51 |
| Training double precision type squeezenet1_0 | 203.14 |
| Training double precision type squeezenet1_1 | 98.05 |
| Training double precision type vgg11 | 1697.71 |
| Training double precision type vgg11_bn | 1729.30 |
| Training double precision type vgg13 | 2491.62 |
| Training double precision type vgg13_bn | 2545.16 |
| Training double precision type vgg16 | 3371.20 |
| Training double precision type vgg16_bn | 3423.86 |
| Training double precision type vgg19_bn | 4314.52 |
| Training double precision type vgg19 | 4249.42 |
| Training double precision type mobilenet_v3_large | 105.55 |
| Training double precision type mobilenet_v3_small | 37.67 |
| Training double precision type shufflenet_v2_x0_5 | 26.52 |
| Training double precision type shufflenet_v2_x1_0 | 61.26 |
| Training double precision type shufflenet_v2_x1_5 | 105.30 |
| Training double precision type shufflenet_v2_x2_0 | 181.04 |
| Inference double precision type mnasnet0_5 | 17.40 |
| Inference double precision type mnasnet0_75 | 28.90 |
| Inference double precision type mnasnet1_0 | 38.39 |
| Inference double precision type mnasnet1_3 | 58.23 |
| Inference double precision type resnet18 | 147.96 |
| Inference double precision type resnet34 | 293.52 |
| Inference double precision type resnet50 | 336.45 |
| Inference double precision type resnet101 | 638.00 |
| Inference double precision type resnet152 | 948.94 |
| Inference double precision type resnext50_32x4d | 372.81 |
| Inference double precision type resnext101_32x8d | 1385.16 |
| Inference double precision type wide_resnet50_2 | 873.05 |
| Inference double precision type wide_resnet101_2 | 1729.28 |
| Inference double precision type densenet121 | 270.13 |
| Inference double precision type densenet169 | 327.19 |
| Inference double precision type densenet201 | 414.73 |
| Inference double precision type densenet161 | 766.35 |
| Inference double precision type squeezenet1_0 | 74.86 |
| Inference double precision type squeezenet1_1 | 34.05 |
| Inference double precision type vgg11 | 576.38 |
| Inference double precision type vgg11_bn | 580.58 |
| Inference double precision type vgg13 | 853.44 |
| Inference double precision type vgg13_bn | 860.31 |
| Inference double precision type vgg16 | 1145.09 |
| Inference double precision type vgg16_bn | 1152.80 |
| Inference double precision type vgg19_bn | 1444.96 |
| Inference double precision type vgg19 | 1437.10 |
| Inference double precision type mobilenet_v3_large | 30.88 |
| Inference double precision type mobilenet_v3_small | 11.23 |
| Inference double precision type shufflenet_v2_x0_5 | 7.43 |
| Inference double precision type shufflenet_v2_x1_0 | 18.26 |
| Inference double precision type shufflenet_v2_x1_5 | 33.35 |
| Inference double precision type shufflenet_v2_x2_0 | 57.85 |

RTX A4000 ADA

| Benchmark | Average time per iteration (ms) |
|---|---|
| Training half precision type mnasnet0_5 | 20.27 |
| Training half precision type mnasnet0_75 | 21.45 |
| Training half precision type mnasnet1_0 | 26.71 |
| Training half precision type mnasnet1_3 | 26.51 |
| Training half precision type resnet18 | 19.62 |
| Training half precision type resnet34 | 32.46 |
| Training half precision type resnet50 | 57.17 |
| Training half precision type resnet101 | 98.20 |
| Training half precision type resnet152 | 138.18 |
| Training half precision type resnext50_32x4d | 75.56 |
| Training half precision type resnext101_32x8d | 228.87 |
| Training half precision type wide_resnet50_2 | 113.76 |
| Training half precision type wide_resnet101_2 | 204.17 |
| Training half precision type densenet121 | 68.97 |
| Training half precision type densenet169 | 85.16 |
| Training half precision type densenet201 | 103.30 |
| Training half precision type densenet161 | 137.55 |
| Training half precision type squeezenet1_0 | 16.72 |
| Training half precision type squeezenet1_1 | 12.91 |
| Training half precision type vgg11 | 51.70 |
| Training half precision type vgg11_bn | 57.63 |
| Training half precision type vgg13 | 86.11 |
| Training half precision type vgg13_bn | 95.87 |
| Training half precision type vgg16 | 102.92 |
| Training half precision type vgg16_bn | 113.75 |
| Training half precision type vgg19_bn | 131.57 |
| Training half precision type vgg19 | 119.70 |
| Training half precision type mobilenet_v3_large | 31.31 |
| Training half precision type mobilenet_v3_small | 19.44 |
| Training half precision type shufflenet_v2_x0_5 | 13.71 |
| Training half precision type shufflenet_v2_x1_0 | 23.61 |
| Training half precision type shufflenet_v2_x1_5 | 26.79 |
| Training half precision type shufflenet_v2_x2_0 | 24.55 |
| Inference half precision type mnasnet0_5 | 4.42 |
| Inference half precision type mnasnet0_75 | 4.02 |
| Inference half precision type mnasnet1_0 | 4.43 |
| Inference half precision type mnasnet1_3 | 4.62 |
| Inference half precision type resnet18 | 5.80 |
| Inference half precision type resnet34 | 9.76 |
| Inference half precision type resnet50 | 15.87 |
| Inference half precision type resnet101 | 28.27 |
| Inference half precision type resnet152 | 40.05 |
| Inference half precision type resnext50_32x4d | 19.53 |
| Inference half precision type resnext101_32x8d | 62.45 |
| Inference half precision type wide_resnet50_2 | 33.53 |
| Inference half precision type wide_resnet101_2 | 59.61 |
| Inference half precision type densenet121 | 18.05 |
| Inference half precision type densenet169 | 21.96 |
| Inference half precision type densenet201 | 27.85 |
| Inference half precision type densenet161 | 37.42 |
| Inference half precision type squeezenet1_0 | 4.39 |
| Inference half precision type squeezenet1_1 | 2.43 |
| Inference half precision type vgg11 | 17.11 |
| Inference half precision type vgg11_bn | 18.41 |
| Inference half precision type vgg13 | 28.44 |
| Inference half precision type vgg13_bn | 30.67 |
| Inference half precision type vgg16 | 34.44 |
| Inference half precision type vgg16_bn | 36.92 |
| Inference half precision type vgg19_bn | 43.14 |
| Inference half precision type vgg19 | 40.54 |
| Inference half precision type mobilenet_v3_large | 5.35 |
| Inference half precision type mobilenet_v3_small | 4.02 |
| Inference half precision type shufflenet_v2_x0_5 | 5.08 |
| Inference half precision type shufflenet_v2_x1_0 | 5.59 |
| Inference half precision type shufflenet_v2_x1_5 | 5.65 |
| Inference half precision type shufflenet_v2_x2_0 | 5.36 |
| Training double precision type mnasnet0_5 | 50.24 |
| Training double precision type mnasnet0_75 | 80.67 |
| Training double precision type mnasnet1_0 | 103.32 |
| Training double precision type mnasnet1_3 | 154.62 |
| Training double precision type resnet18 | 337.94 |
| Training double precision type resnet34 | 677.77 |
| Training double precision type resnet50 | 789.92 |
| Training double precision type resnet101 | 1484.34 |
| Training double precision type resnet152 | 2170.57 |
| Training double precision type resnext50_32x4d | 877.37 |
| Training double precision type resnext101_32x8d | 3652.49 |
| Training double precision type wide_resnet50_2 | 2154.61 |
| Training double precision type wide_resnet101_2 | 4176.52 |
| Training double precision type densenet121 | 607.87 |
| Training double precision type densenet169 | 744.64 |
| Training double precision type densenet201 | 962.68 |
| Training double precision type densenet161 | 1759.77 |
| Training double precision type squeezenet1_0 | 164.37 |
| Training double precision type squeezenet1_1 | 78.71 |
| Training double precision type vgg11 | 1362.61 |
| Training double precision type vgg11_bn | 1387.25 |
| Training double precision type vgg13 | 2006.02 |
| Training double precision type vgg13_bn | 2047.53 |
| Training double precision type vgg16 | 2702.21 |
| Training double precision type vgg16_bn | 2747.24 |
| Training double precision type vgg19_bn | 3447.17 |
| Training double precision type vgg19 | 3397.99 |
| Training double precision type mobilenet_v3_large | 84.66 |
| Training double precision type mobilenet_v3_small | 29.82 |
| Training double precision type shufflenet_v2_x0_5 | 27.40 |
| Training double precision type shufflenet_v2_x1_0 | 48.32 |
| Training double precision type shufflenet_v2_x1_5 | 82.22 |
| Training double precision type shufflenet_v2_x2_0 | 141.70 |
| Inference double precision type mnasnet0_5 | 12.99 |
| Inference double precision type mnasnet0_75 | 22.42 |
| Inference double precision type mnasnet1_0 | 30.06 |
| Inference double precision type mnasnet1_3 | 46.95 |
| Inference double precision type resnet18 | 118.04 |
| Inference double precision type resnet34 | 231.52 |
| Inference double precision type resnet50 | 268.63 |
| Inference double precision type resnet101 | 495.20 |
| Inference double precision type resnet152 | 726.49 |
| Inference double precision type resnext50_32x4d | 291.48 |
| Inference double precision type resnext101_32x8d | 1055.11 |
| Inference double precision type wide_resnet50_2 | 690.69 |
| Inference double precision type wide_resnet101_2 | 1347.55 |
| Inference double precision type densenet121 | 224.36 |
| Inference double precision type densenet169 | 268.91 |
| Inference double precision type densenet201 | 343.20 |
| Inference double precision type densenet161 | 635.87 |
| Inference double precision type squeezenet1_0 | 61.93 |
| Inference double precision type squeezenet1_1 | 27.01 |
| Inference double precision type vgg11 | 462.34 |
| Inference double precision type vgg11_bn | 468.45 |
| Inference double precision type vgg13 | 692.82 |
| Inference double precision type vgg13_bn | 703.35 |
| Inference double precision type vgg16 | 924.44 |
| Inference double precision type vgg16_bn | 936.51 |
| Inference double precision type vgg19_bn | 1169.10 |
| Inference double precision type vgg19 | 1156.38 |
| Inference double precision type mobilenet_v3_large | 24.24 |
| Inference double precision type mobilenet_v3_small | 8.85 |
| Inference double precision type shufflenet_v2_x0_5 | 6.36 |
| Inference double precision type shufflenet_v2_x1_0 | 14.30 |
| Inference double precision type shufflenet_v2_x1_5 | 24.86 |
| Inference double precision type shufflenet_v2_x2_0 | 43.85 |
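Because both cards were run in double precision, those rows can be compared directly, keeping in mind that the two cards were tested on different host systems. The sketch below computes the speedup for three models (training times copied from the two PyTorch tables, rounded to two decimals), plus the half- versus double-precision gain on the RTX A4000 ADA. The RTX A4000 has no half-precision results here, so a half-precision comparison between the cards is not possible from these data.

```python
# Average training time per iteration in ms, copied from the PyTorch tables.
a4000_double = {"resnet50": 1016.40, "densenet121": 751.98, "vgg16": 3371.20}
ada_double = {"resnet50": 789.92, "densenet121": 607.87, "vgg16": 2702.21}
ada_half = {"resnet50": 57.17, "densenet121": 68.97, "vgg16": 102.92}


def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """How many times faster the candidate is (values above 1.0 mean faster)."""
    return baseline_ms / candidate_ms


for model in a4000_double:
    gen = speedup(a4000_double[model], ada_double[model])
    prec = speedup(ada_double[model], ada_half[model])
    print(f"{model}: ADA vs A4000 (double) {gen:.2f}x, ADA half vs double {prec:.1f}x")
```

The same `speedup` function applies to any pair of rows; the larger half-versus-double factor mainly reflects that consumer and workstation GPUs have far lower FP64 than FP16 throughput.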

Conclusion

The new graphics card has proven to be an effective solution for a range of professional tasks. Thanks to its compact size, it is well suited to powerful SFF (Small Form Factor) computers. Its 6,144 CUDA cores and 20GB of memory on a 160-bit bus make it one of the most capable cards in its segment, and its low 70W TDP helps reduce power costs. Four Mini-DisplayPort outputs allow the card to drive multiple monitors or serve as a multi-channel graphics solution.

The RTX 4000 SFF ADA represents a significant advance over previous generations, matching the single-precision performance of a card that consumes twice the power. Because it needs no PCIe power connector, it is easy to integrate into low-power workstations without sacrificing performance.