Rent high-performance GPU servers equipped with the latest professional Nvidia Tesla H100 and A100 graphics cards. These servers are ideal for demanding applications such as AI acceleration, processing large datasets, and tackling complex high-performance computing (HPC) tasks. Experience unmatched speed and efficiency for your advanced computing needs.

Rent Nvidia Tesla GPU A100 and H100 Servers from €1.53/hr

Order a GPU server with pre-installed software and get a ready-to-use environment in minutes.

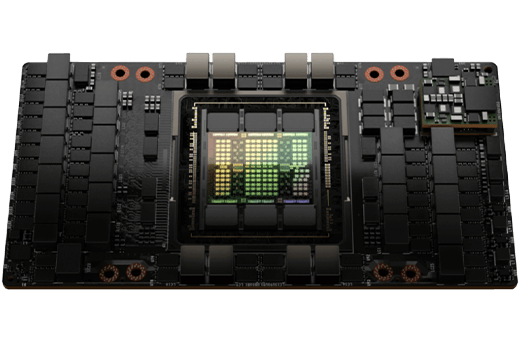

NVIDIA H100, powered by the new Hopper architecture, is an exceptional GPU that delivers powerful AI acceleration, big data processing, and high-performance computing (HPC) capabilities.

The Hopper architecture introduces fourth-generation Tensor Cores that are up to nine times faster than their predecessors, improving performance across a wide range of machine learning and deep learning tasks.

With 80GB of high-speed HBM2e memory, this GPU can handle large language models (LLMs) and other AI tasks with ease.
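As a rough sizing aid, the memory needed just to hold a model's weights is its parameter count multiplied by the bytes per parameter at the chosen precision. The sketch below is a minimal illustration under that assumption only: the helper names `weights_gb` and `fits_on_gpu` and the precision table are hypothetical, and activations, KV cache, optimizer states, and framework overhead are not counted, so real workloads need more memory than this estimate.

```python
# Rough sizing sketch: memory for model weights only (hypothetical helpers).
# Activations, KV cache, optimizer states and framework overhead are NOT counted.

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1}


def weights_gb(params_billion: float, precision: str) -> float:
    """Weights-only memory in GB (1 GB = 10**9 bytes).

    Billions of parameters times bytes per parameter gives GB directly.
    """
    return params_billion * BYTES_PER_PARAM[precision]


def fits_on_gpu(params_billion: float, precision: str, gpu_memory_gb: float = 80.0) -> bool:
    """True if the weights alone fit in one GPU with `gpu_memory_gb` of memory."""
    return weights_gb(params_billion, precision) <= gpu_memory_gb


if __name__ == "__main__":
    # FP8 Tensor Core math is an H100 feature; FP8 storage is shown here only for sizing.
    for params, precision in [(13, "fp16"), (70, "fp16"), (70, "fp8")]:
        print(
            f"{params}B @ {precision}: {weights_gb(params, precision):.0f} GB, "
            f"fits on an 80 GB GPU: {fits_on_gpu(params, precision)}"
        )
```

By this estimate, a 13B-parameter model in FP16 needs about 26 GB for its weights and fits on one 80GB GPU, while a 70B model in FP16 needs about 140 GB and must be split across at least two.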

The Nvidia A100 80GB is a data center accelerator designed to speed up AI training and inference as well as high-performance computing (HPC) applications.

At its launch, the A100 80GB offered the largest memory capacity and fastest memory bandwidth of any GPU on the market. This makes it well suited to training and deploying very large AI models, as well as to accelerating HPC applications that work with large datasets.

At launch, the H100 was NVIDIA's most powerful accelerator, offering up to 4X faster AI training and up to 7X faster HPC performance than the previous generation.

H100’s architecture is supercharged for the largest workloads, from large language models to scientific computing applications. It is also highly scalable, supporting up to 18 NVLink interconnections for high-bandwidth communication between GPUs.

H100 GPU is designed for enterprise use, with features such as support for PCIe Gen5, NDR Quantum-2 InfiniBand networking, and NVIDIA Magnum IO software for efficient scalability.

H100's fourth-generation Tensor Cores are specifically designed to accelerate AI workloads, and they offer up to 2X faster performance for FP8 precision than the previous generation.

Fine-grained structured sparsity allows the A100 GPU to skip zero-valued weights in matrices pruned to a 2:4 pattern (two of every four values are zero), which can improve performance by up to 2X for certain AI workloads.
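NVIDIA's Sparse Tensor Cores accelerate weights that follow a 2:4 pattern: in every group of four consecutive values, at least two are zero. The pure-Python sketch below illustrates only the pruning step (keep the two largest-magnitude weights per group, zero the rest); the function names are hypothetical, it runs on the CPU, and in practice frameworks provide their own pruning tools and the model is usually fine-tuned afterwards to recover accuracy.

```python
# 2:4 structured pruning sketch (illustration only, not NVIDIA's tooling).
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Return a copy of `weights` where each group of 4 keeps its 2 largest magnitudes."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = list(weights)
    for start in range(0, len(weights), 4):
        group = range(start, start + 4)
        # Indices of the two smallest-magnitude weights in this group.
        drop = sorted(group, key=lambda i: abs(weights[i]))[:2]
        for i in drop:
            pruned[i] = 0.0
    return pruned


def is_2_of_4_sparse(weights: List[float]) -> bool:
    """True if every group of 4 consecutive values contains at least 2 zeros."""
    return all(
        sum(1 for w in weights[s:s + 4] if w == 0.0) >= 2
        for s in range(0, len(weights), 4)
    )


if __name__ == "__main__":
    row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.8, 0.01]
    print(prune_2_of_4(row))  # [0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.8, 0.0]
```

Because exactly half of the values in each group are zero and their positions follow a fixed structure, the hardware can skip them, which is where the "up to 2X" figure comes from.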

Multi-Instance GPU (MIG) technology allows the A100 GPU to be partitioned into up to seven smaller, isolated GPU instances, so multiple workloads can run simultaneously.
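When planning how to split an A100 80GB with MIG, a quick first check is whether the requested instances stay within the card's 7 compute slices and 80 GB of memory. The sketch below is a simplified model under stated assumptions: the profile table covers common A100 80GB profiles, the helper `may_fit` is hypothetical, and real placement rules are stricter than these two totals. The authoritative list of profiles and valid combinations comes from the driver (for example `nvidia-smi mig -lgip`).

```python
# Simplified MIG sizing check for one A100 80GB (hypothetical helper).
# Assumptions: 7 compute slices and 80 GB of memory per GPU, and the common
# A100 80GB profiles listed below. Real placement rules are stricter than these
# two totals, so True means "may fit", not "guaranteed valid".
from typing import Iterable

# Profile name -> (compute slices, memory in GB).
A100_80GB_PROFILES = {
    "1g.10gb": (1, 10),
    "2g.20gb": (2, 20),
    "3g.40gb": (3, 40),
    "4g.40gb": (4, 40),
    "7g.80gb": (7, 80),
}
TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_GB = 80


def may_fit(profiles: Iterable[str]) -> bool:
    """True if the requested instances stay within the GPU's compute and memory totals."""
    requested = [A100_80GB_PROFILES[name] for name in profiles]
    compute = sum(slices for slices, _ in requested)
    memory = sum(gb for _, gb in requested)
    return compute <= TOTAL_COMPUTE_SLICES and memory <= TOTAL_MEMORY_GB


if __name__ == "__main__":
    print(may_fit(["1g.10gb"] * 7))         # seven 10GB instances -> True
    print(may_fit(["3g.40gb", "4g.40gb"]))  # two larger instances -> True
    print(may_fit(["4g.40gb", "4g.40gb"]))  # needs 8 compute slices -> False
```

The seven-instance case corresponds to the "up to 7 MIGs @ 10GB" configuration listed in the specification table.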

Here are some benchmarks for the Nvidia A100 80GB and H100 GPUs compared to other GPUs for AI and HPC:

AI Benchmarks

| Benchmark | A100 80GB | H100 | A40 | V100 |
|---|---|---|---|---|
| ResNet-50 Inference (images/s) | 13,128 | 24,576 | 6,756 | 3,391 |
| BERT Large Training (steps/s) | 1,123 | 2,231 | 561 | 279 |
| GPT-3 Training (Tokens/s) | 175B | 400B | 87.5B | 43.75B |

HPC Benchmarks

| Benchmark | A100 80GB | H100 | A40 | V100 |
|---|---|---|---|---|
| HPL DP (TFLOPS) | 40 | 90 | 20 | 10 |
| HPCG (GFLOPS) | 45 | 100 | 22.5 | 11.25 |
| LAMMPS (Atoms/day) | 115T | 250T | 57.5T | 28.75T |

The Nvidia H100 GPU is considerably faster than the A100 in both AI and HPC benchmarks, and it also outperforms other GPUs such as the A40 and V100. The Nvidia A100, however, rents at a lower price and can be more cost-efficient for many AI and HPC tasks.
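Whether the faster H100 or the cheaper A100 is more cost-efficient depends on how much faster the H100 runs your job compared with its hourly price premium: the H100 wins only when its speedup exceeds the ratio of the two hourly prices. A minimal sketch, assuming hourly billing and a job runtime that shrinks in proportion to a speedup you measure yourself; the helper names are hypothetical, and the prices below are placeholders, not actual rates.

```python
# Cost-per-job comparison sketch. Prices and speedups are hypothetical
# placeholders; plug in your own quote and a speedup measured on your workload.


def cost_per_job(hourly_price: float, job_hours: float) -> float:
    """Rental cost of one job billed by the hour."""
    return hourly_price * job_hours


def break_even_speedup(a100_hourly: float, h100_hourly: float) -> float:
    """The H100 is cheaper per job only if it runs the job more than this many times faster."""
    return h100_hourly / a100_hourly


def cheaper_gpu(a100_hourly: float, h100_hourly: float,
                a100_job_hours: float, h100_speedup: float) -> str:
    """Return "H100" or "A100", whichever costs less for the same job (ties go to A100)."""
    a100_cost = cost_per_job(a100_hourly, a100_job_hours)
    # Assumes the job's runtime shrinks in proportion to the measured speedup.
    h100_cost = cost_per_job(h100_hourly, a100_job_hours / h100_speedup)
    return "H100" if h100_cost < a100_cost else "A100"


if __name__ == "__main__":
    # Hypothetical: the H100 costs twice as much per hour as the A100.
    print(break_even_speedup(2.0, 4.0))                                 # 2.0
    print(cheaper_gpu(2.0, 4.0, a100_job_hours=10.0, h100_speedup=2.5))  # H100
    print(cheaper_gpu(2.0, 4.0, a100_job_hours=10.0, h100_speedup=1.5))  # A100
```

With these placeholder prices, a job that takes 10 hours on the A100 costs 20 units there; at a 2.5X speedup the H100 finishes in 4 hours for 16 units, while at 1.5X it would cost about 26.7 units.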

| Specification | H100 for PCIe-Based Servers | A100 80GB PCIe |
|---|---|---|
| FP64 | 26 TFLOPS | 9.7 TFLOPS |
| FP64 Tensor Core | 51 TFLOPS | 19.5 TFLOPS |
| FP32 | 51 TFLOPS | 19.5 TFLOPS |
| TF32 Tensor Core | 756 TFLOPS* | 156 TFLOPS (312 TFLOPS*) |
| BFLOAT16 Tensor Core | 1,513 TFLOPS* | 312 TFLOPS (624 TFLOPS*) |
| FP16 Tensor Core | 1,513 TFLOPS* | 312 TFLOPS (624 TFLOPS*) |
| FP8 Tensor Core | 3,026 TFLOPS* | |
| INT8 Tensor Core | 3,026 TOPS* | 624 TOPS (1,248 TOPS*) |
| GPU memory | 80GB | 80GB HBM2e |
| GPU memory bandwidth | 2TB/s | 1,935 GB/s |
| Decoders | 7 NVDEC, 7 JPEG | |
| Max thermal design power (TDP) | 300-350W (configurable) | 300W |
| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 10GB |
| Form factor | PCIe dual-slot air-cooled | PCIe dual-slot air-cooled or single-slot liquid-cooled |
| Interconnect | NVLink: 600GB/s; PCIe Gen5: 128GB/s | NVIDIA® NVLink® Bridge for 2 GPUs: 600 GB/s; PCIe Gen4: 64 GB/s |
| Server options | Partner and NVIDIA-Certified Systems with 1–8 GPUs | Partner and NVIDIA-Certified Systems™ with 1–8 GPUs |
| NVIDIA AI Enterprise | Included | |

Figures marked * are quoted with sparsity; dense throughput is half the sparse figure.

Reach out to our sales department to go over the terms and conditions before placing an order for GPU servers equipped with Nvidia Tesla H100 or A100 cards.


NVIDIA Tesla H100 is a high-performance GPU for AI model training, deep learning, and high-performance computing applications.

The Tesla H100 has 16,896 CUDA cores in its SXM5 version (14,592 in the PCIe version), while the Tesla A100 has 6,912 CUDA cores.

The NVIDIA Tesla A100 is a versatile GPU for enterprise deep learning and HPC: the same card can handle training, inference, and scale-out workloads.

Depending on the workload and configuration, the Tesla H100 delivers around 25% higher FPS than the A100.

The Nvidia A100 comes with 40GB or 80GB of memory, while the H100 has 80GB of faster memory.

The H100 outperforms the A100 in AI training and HPC, while the A100 offers a balanced solution for mixed AI workloads.

Nvidia H100 server has the following advantages:

If you need maximum performance for complex AI tasks such as training GPT models or running scientific simulations, renting an H100 server is the best choice. An NVIDIA H100 server offers significant speed and power-efficiency gains over the A100, especially when working with large amounts of data.

However, if your budget is limited or your tasks are less demanding, renting an A100 server may be more cost-effective. The A100 is still a powerful solution, especially for inference and medium-sized machine learning models.

Yes, many providers allow you to customize the configuration when renting H100 or A100 servers. You can choose the number of GPUs, the amount of RAM, the type of storage (SSD/NVMe) and even install the necessary software for your tasks.

However, the level of customization depends on the hosting provider. Some offer flexible plans that let you add resources on demand, while others provide only preconfigured NVIDIA H100 server setups. It is best to confirm the terms before renting.

If you're looking to rent an H100 server for deep learning, simulation, or high-throughput data processing, the H100 offers industry-leading performance. When choosing a GPU server for AI acceleration, the choice of hardware is critical. All of our GPU rentals deliver state-of-the-art performance for AI, deep learning, and high-performance computing (HPC). The Nvidia Tesla H100 and Tesla A100 are built for AI and HPC: both are designed for heavy computational workloads, which makes them a strong fit for businesses renting GPU servers.

These GPUs are built for power and flexibility, which translates into efficiency. The H100 and A100 offer top-of-the-line performance to supercharge your workloads, from faster AI model training to accelerated data processing.

If you need a GPU for large-scale AI or high-performance computing, the Nvidia H100 80GB is a powerhouse. It is designed to accelerate the training and inference of complex AI models, allowing researchers and large enterprises to reach next-level machine learning insights sooner. The Nvidia Tesla H100 delivers an unmatched performance boost for large-scale data processing, simulation, and complex scientific workloads. Rent an H100 GPU for these tasks.

Key Features of H100 GPU:

Renting an A100 GPU is a game changer for businesses that need efficient and powerful GPU solutions. The Nvidia Tesla A100 delivers impressive AI inference performance, allowing models to produce accurate outputs with low latency. A100 rentals also suit enterprise-level computation and simulation tasks, providing reliability and performance at scale.

Key Benefits of A100 GPU:

Choosing between a Nvidia H100 server and an A100 comes down to your workload priorities: H100s are purpose-built for raw compute power.

When comparing the H100 and A100, it helps to know which use cases each GPU serves best. The H100 is the champion for high-throughput data analytics, while the A100 is a great fit for a broad variety of AI and machine learning use cases. The right choice ultimately depends on what your workload actually requires.

Key Comparisons:

Both the H100 GPU and A100 GPU provide exceptional performance for AI, but each has unique strengths:

For data processing and HPC, the Nvidia Tesla H100 is the top card thanks to its higher memory bandwidth and faster data transfer. It is especially useful for simulation, predictive analytics, and scientific computing. The Tesla A100, on the other hand, offers a balance between inference and data analytics requirements.

We offer flexible and competitive pricing plans for renting Nvidia Tesla H100 and A100 servers:

Entry Plan

Performance Plan

Ideal for those who need to rent H100 servers for large-scale AI training or high-performance computing workloads.

Save up to 12% on long-term rentals.
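To estimate a monthly budget, multiply the hourly rate by the hours in a month and apply any long-term discount. A minimal sketch, assuming the advertised starting rate of €1.53/hr per server, an average month of 730 hours, and a discount applied as a flat percentage off the hourly rate; the helper `monthly_cost` is hypothetical, and the actual discount structure is set in your rental terms.

```python
# Monthly rental budget sketch. Assumptions: €1.53/hr is the advertised
# starting rate per server, a month averages 730 hours (8,760 h / 12), and the
# long-term discount is a flat percentage off the hourly rate.

HOURS_PER_MONTH = 730


def monthly_cost(hourly_rate: float, servers: int = 1, discount: float = 0.0) -> float:
    """Estimated cost of running `servers` servers around the clock for one month."""
    if not 0.0 <= discount < 1.0:
        raise ValueError("discount must be a fraction in [0, 1)")
    return hourly_rate * HOURS_PER_MONTH * servers * (1.0 - discount)


if __name__ == "__main__":
    print(f"No discount:  €{monthly_cost(1.53):,.2f}")
    print(f"12% discount: €{monthly_cost(1.53, discount=0.12):,.2f}")
```

Under these assumptions, one server at the starting rate comes to about €1,117 per month, or about €983 with the full 12% discount.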

Rented Nvidia Tesla H100 and A100 GPUs excel in a wide range of applications:

Deep Learning: Training image recognition or natural language processing models on large-scale datasets.

HPC Tasks:

Enterprise AI:

Data Science: To quickly provide business insights.