Artificial intelligence, machine learning and deep learning are an integral part of the modern age, where business and science are finding a way to transform big data into actionable information. In this article, you will learn several things about deep learning: What is the deep learning process? What machines and mechanisms implement the deep learning process? How do these things work, and what are their benefits? What is the relationship between hardware and software in the field of deep learning? What are the best practices and solutions for implementing the deep learning process? Read on to find out.

What is a GPU server?

A GPU server is a machine built for compute-intensive data processing. It combines a modern CPU, fast RAM and one or more high-performance GPUs, all connected by hardware logic and data buses. It is called a GPU server because the powerful GPU does most of the heavy computation. The advantages of a GPU include a large amount of on-board, high-bandwidth memory, plus high-speed CUDA cores and tensor cores. In effect, the GPU in a GPU server is a computer within a computer that performs specific calculations at very high speed.

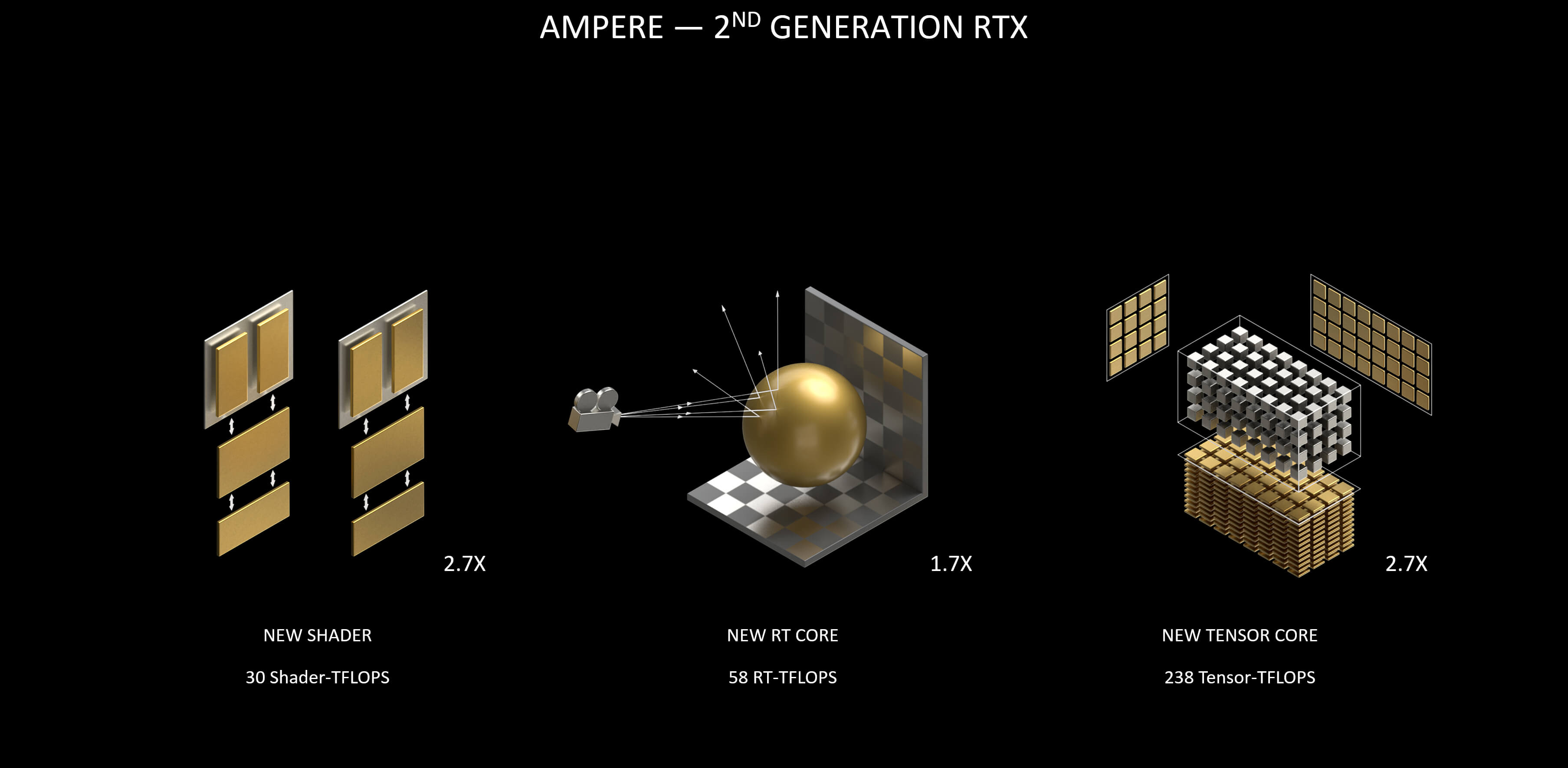

The NVIDIA RTX 3090 GPU is built on the Ampere architecture and contains the above components.

What is Deep Learning?

A modern GPU server is designed to run deep learning workloads very quickly. Deep learning is a branch of machine learning, which is itself a field of artificial intelligence. It brings together big data, neural networks, parallel computing, and large numbers of matrix calculations. Its algorithms process large amounts of data and turn them into trained models that can be used in software. Deep learning relies on high-performance computing to solve artificial intelligence problems.

How Does a GPU Work?

A modern GPU is a powerful computing unit in a computer which, like a CPU, can be used for specialized calculations. A powerful GPU includes a large number of CUDA cores and tensor cores, a large amount of memory, and a high-bandwidth memory cache. All these components are connected by high-bandwidth links. A high-performance GPU is very good at specific computing tasks, such as training neural networks, mathematical modeling using matrix calculations, and various kinds of 3D graphics work. Very often, these tasks are solved in the Python programming language with the help of specialized software. Any task that can be split into many parallel calculations is handled well by a GPU.
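To make the parallel-computing point concrete, here is a minimal pure-Python sketch. It is not GPU code, and the function names are made up for illustration. It shows that every cell of a matrix product is an independent dot product, which is why the work can be spread across many cores at once.

```python
from itertools import product


def output_cell(a, b, i, j):
    """Dot product of row i of `a` and column j of `b`; needs no other cell."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, cols = len(a), len(b[0])
    result = [[0] * cols for _ in range(rows)]
    # Each (i, j) pair is an independent task. A GPU would hand these to
    # many cores at once; here we simply loop over them one by one.
    for i, j in product(range(rows), range(cols)):
        result[i][j] = output_cell(a, b, i, j)
    return result


def multiply_add_count(m, k, n):
    """Number of multiply-add operations for an (m x k) by (k x n) product."""
    return m * k * n


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))                           # [[19, 22], [43, 50]]
print(multiply_add_count(1024, 1024, 1024))   # 1073741824
```

Even a modest 1024 x 1024 product needs over a billion multiply-adds, none of which depends on another output cell. That independence is what a GPU exploits.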

What Are the Benefits of a GPU?

A mainstream GPU has a number of advantages in certain tasks. It is very good at deep learning because of three factors. The first is the large number of cores designed for matrix calculations, which are central to deep learning. The second is the high memory bandwidth, which lets you work with big data quickly. The third is parallel computation, which can speed up neural network training by orders of magnitude.

Why are GPUs Important in Deep Learning?

A GPU is important for deep learning because it has the largest number of compute units suited to the task. In a deep learning server, the GPU has far more cores than any other computing unit. If you compare the CPU and the GPU, which are the only units in such a server that can perform these calculations, the GPU wins by a huge margin for deep learning tasks. In practice, the GPU is the unit that handles neural network training. A CPU can run the same calculations, but it is far too slow for models of realistic size.

How to Choose the Best GPU for Deep Learning

When choosing the best GPU for deep learning, several factors should be considered. The first is the power of the GPU, expressed in the number of cores and the speed at which data moves between those cores and memory. The second is whether identical GPUs can be paired with each other, since multiple GPUs can work cooperatively to accelerate deep learning. Finally, pay particular attention to the manufacturer's licensing terms and to the related software you plan to use.

Algorithm Factors Affecting GPU Use

Algorithmic factors are equally important when it comes to GPU usage. Deep learning problems are formulated and solved in such a way that the following parameters come to the fore: first is parallel problem solving, i.e., executing the learning algorithm with several computing units at the same time (in parallel). The second is efficient use of memory, large amounts of which are required for parallel processing of large data. High overall GPU performance is an important factor for achieving these goals.
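The idea of running the learning algorithm on several computing units at once can be sketched in plain Python. This is a minimal illustration under stated assumptions, not how any particular framework implements it: the one-parameter model, the toy data and the function names are all made up, and the "workers" run one after another here rather than on separate GPUs.

```python
def gradient(w, batch):
    """Mean-squared-error gradient for the model y = w * x over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(w, batch, num_workers):
    """Split the batch into equal shards, compute one gradient per shard
    (one shard per GPU in a real setup), then average the results."""
    if len(batch) % num_workers != 0:
        raise ValueError("this sketch assumes the batch divides evenly")
    shard_size = len(batch) // num_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size]
              for i in range(num_workers)]
    # On real hardware these gradients would be computed at the same time.
    shard_grads = [gradient(w, shard) for shard in shards]
    return sum(shard_grads) / num_workers


# Toy data following y = 3x; the values are invented for illustration.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
w = 0.5

full = gradient(w, data)
split = data_parallel_gradient(w, data, num_workers=4)
print(full, split)
```

With equal-sized shards, the averaged per-shard gradient matches the gradient over the whole batch, so splitting the work does not change the result of the training step.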

Best Deep Learning Workstations

It is difficult to say which deep learning workstations are the best. The general rule is this: the best deep learning workstation should be equipped with the best GPU available today, for example, an NVIDIA GeForce RTX 3090, RTX 3080, RTX 3070, RTX A6000, RTX A5000, or RTX A4000. Ideally, there should be at least four GPUs per server, and the server should have a powerful CPU, such as an AMD Threadripper or Intel Core i9. The third element of success for deep learning workstations is a large amount of high-speed memory, up to 1 TB.

NVIDIA for Machine Learning

NVIDIA is a major vendor that offers its own solutions for deep learning tasks, such as the RTX 3090, RTX 3080, and RTX 3070. These solutions share the Ampere GPU architecture, a large number of CUDA cores and tensor cores, high clock frequencies, and large amounts of high-bandwidth memory. The RTX 3090 GPU has 24 GB of GDDR6X, 10496 CUDA Cores and 328 Tensor Cores. The RTX 3080 GPU is equipped with 10 GB of GDDR6X, 8704 CUDA Cores and 272 Tensor Cores. The RTX 3070 GPU includes 8 GB of GDDR6, 5888 CUDA Cores and 184 Tensor Cores. Today, NVIDIA solutions are the best available on the market.

NVIDIA RTX 3090, RTX 3080, and RTX 3070:

- RTX 3090
- RTX 3080
- RTX 3070
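The figures quoted in this section are easier to compare side by side. The short sketch below uses only the numbers from the text; the dictionary layout and function names are illustrative. Core counts are not a benchmark, so treat the ratios as a rough guide rather than a measure of training speed.

```python
# Figures copied from this section of the article.
specs = {
    "RTX 3090": {"memory_gb": 24, "cuda_cores": 10496, "tensor_cores": 328},
    "RTX 3080": {"memory_gb": 10, "cuda_cores": 8704, "tensor_cores": 272},
    "RTX 3070": {"memory_gb": 8, "cuda_cores": 5888, "tensor_cores": 184},
}


def cuda_per_tensor_core(card):
    """How many CUDA cores the card has for each tensor core."""
    return specs[card]["cuda_cores"] / specs[card]["tensor_cores"]


def relative_cuda_cores(card, baseline="RTX 3090"):
    """CUDA core count as a fraction of the baseline card's count."""
    return specs[card]["cuda_cores"] / specs[baseline]["cuda_cores"]


for card in specs:
    print(f"{card}: {specs[card]['memory_gb']} GB, "
          f"{cuda_per_tensor_core(card):.0f} CUDA cores per tensor core, "
          f"{relative_cuda_cores(card):.0%} of the RTX 3090's CUDA cores")
```

All three cards come out at 32 CUDA cores per tensor core, so within this family the main differences are the total number of cores and the amount of memory.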

What GPU cards are the best for deep learning: RTX 3090 / 3080, RTX A5000 / A4000?

Each of the above-mentioned GPU cards has its own advantages, including price, and suits specific tasks. Both the Professional A-series and the 30-series are well suited to deep learning projects.

For instance, NVIDIA's 30-series is a great option for developers, data scientists and researchers who want to get started in AI, as the NVIDIA RTX 3090 and RTX 3080 already support advanced productivity-enhancing features like Tensor Cores and Unified Memory.

On the other hand, the Professional A-series cards offer additive GPU RAM. With multiple 3080s or 3090s, one’s project will be limited to the RAM of a single card (10 GB for the 3080 or 24 GB for the 3090), while the Professional A-series cards unlock the combined RAM of all the cards.

As for the specific features of the RTX A4000, this GPU offers third-generation Tensor Cores, second-generation RT Cores, 16 GB of fast GDDR6 memory, a single-slot form factor and PCI Express Gen 4.

The RTX A5000 is expandable up to 48GB of memory using NVIDIA NVLink® to connect two GPUs.
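Whether a model fits in 24 GB or needs 48 GB can be estimated before buying anything. The sketch below rests on a common rule of thumb that is an assumption here, not a figure from this article: FP32 training with the Adam optimizer takes roughly 16 bytes per parameter. It ignores activations and framework overhead, so real usage will be higher, and it assumes the software can actually spread the model across both linked cards.

```python
# Rule of thumb (an assumption, not a measured figure): FP32 training with
# the Adam optimizer needs about 16 bytes per parameter:
# 4 (weights) + 4 (gradients) + 8 (two Adam moment estimates).
BYTES_PER_PARAM = 16


def training_memory_gb(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Approximate memory for weights, gradients and optimizer state, in GB."""
    return num_params * bytes_per_param / 1e9


def fits(num_params, gpu_memory_gb):
    """True if the estimate fits in the given amount of GPU memory."""
    return training_memory_gb(num_params) <= gpu_memory_gb


# Memory sizes quoted in this article.
single_rtx_3090_gb = 24
two_a5000_nvlink_gb = 48

for params in (1_000_000_000, 2_000_000_000):
    need = training_memory_gb(params)
    print(f"{params:,} parameters: ~{need:.0f} GB, "
          f"fits in 24 GB: {fits(params, single_rtx_3090_gb)}, "
          f"fits in 48 GB: {fits(params, two_a5000_nvlink_gb)}")
```

Under these assumptions, a one-billion-parameter model fits on a single 24 GB card, while a two-billion-parameter model needs the 48 GB of a linked pair.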

In testing, the NVIDIA RTX A4000 shows near-linear scaling up to 4 GPUs, while the A5000 shows near-linear scaling up to 8 GPUs. When choosing the best GPU card for deep learning, one should therefore be guided by the project’s needs and requirements.
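"Near-linear scaling" has a simple numerical meaning: the speedup from N GPUs is close to N. The sketch below shows one way to check this for your own setup. The throughput numbers are hypothetical placeholders, not test results, and the function names are illustrative.

```python
def speedup(throughput_1gpu, throughput_ngpu):
    """How many times faster the multi-GPU run is than the single-GPU run."""
    return throughput_ngpu / throughput_1gpu


def scaling_efficiency(throughput_1gpu, throughput_ngpu, num_gpus):
    """Speedup divided by GPU count; 1.0 means perfectly linear scaling."""
    return speedup(throughput_1gpu, throughput_ngpu) / num_gpus


# Hypothetical measurements in images per second (not real benchmark data).
one_gpu = 100.0
four_gpus = 380.0

eff = scaling_efficiency(one_gpu, four_gpus, num_gpus=4)
print(f"speedup: {speedup(one_gpu, four_gpus):.2f}x, efficiency: {eff:.0%}")
```

An efficiency close to 100% means that adding GPUs pays off almost fully. A value that drops sharply as cards are added suggests that communication between GPUs, or the data pipeline, has become the bottleneck.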

Conclusion

Using a GPU is the best solution for deep learning tasks because of the technical parameters of its components. Lots of cores and lots of fast memory: that is the answer to the challenge. The GPU in a GPU server is the best at training neural networks. The CPU is far too slow for the job, and besides the CPU and GPU, there are no other computing units in a deep learning workstation. Leading vendor NVIDIA offers a series of deep learning GPUs, including the RTX 3090, RTX 3080, RTX 3070, RTX A6000, RTX A5000, and RTX A4000.

FAQ

What's the best GPU for deep learning in 2022?

Leading vendor NVIDIA offers the best GPUs for deep learning in 2022. The models are the RTX 3090, RTX 3080, RTX 3070, RTX A6000, RTX A5000, and RTX A4000. It is fair to say that these are the main practical choices for deep learning in 2022.

Is a GPU good enough for deep learning?

Whether a single GPU is good enough depends on the size of the project. For demanding workloads, a set of cooperating GPUs is the best solution for deep learning today.

Why is a GPU best for neural networks?

A GPU is best for neural networks because it has tensor cores on board. Tensor cores speed up the matrix calculations needed for neural networks. Also, the large amount of fast memory in a GPU is important for neural networks. The decisive factor is the parallel computations for neural networks that the GPU provides.

Is the RTX 3080 good for deep learning?

The RTX 3080 is good for deep learning. It has a large number of CUDA cores and tensor cores, 8704 and 272 respectively. It also has 10 GB of high-speed GDDR6X memory, which is crucial for deep learning. At the same time, the RTX 3080 is cheaper than the ultra-high-performance RTX 3090 and is easier to find on the market.