Updated 19/11/2021

Why do you need a Dedicated Server

How to choose the right dedicated server?

Can I have a real example of choosing a configuration?

How to be sure of the reliability of the data center?

Choosing a dedicated server is a great responsibility. To find the right configuration, you need to decide on the hardware requirements. In this article, we will share tips on the best approach to choosing your dedicated server architecture so that it is as effective as possible for the given task.

Why do you need a Dedicated Server

A Dedicated Server is a physical machine that you have entirely at your disposal. This is how it differs from VPS / VDS (virtual servers), which share the resources of one physical machine with other users.

Most likely, you already know about the advantages of dedicated servers, since you are already looking into one. If the question “Do I need a dedicated server, or is a VPS enough?” is still relevant to you, we recommend that you familiarize yourself with the material on whether a Dedicated Server or VPS is right for you.

So, dedicated servers give you flexible customization and isolated hardware. You fully administer the server and can create any configuration, choosing the processor, RAM, and disk space according to your specific needs. You can also connect specific hardware to a dedicated server - for example, a USB key or network equipment. This will allow you to handle solutions of any complexity.

But the main advantage of dedicated servers is that you can customize the components that will provide the best performance for a specific project. Therefore, to select those components, you need to determine what exactly the server will be used for.

How to choose the right dedicated server?

The main thing to understand is what equipment requirements your project has. Developing web applications, hosting a website, backing up data and storing company documents - each task may have its own specific needs. Do you need a high-frequency processor or a lot of RAM? Let's take a look.

CPU

The processor is characterized mainly by two parameters: frequency and number of cores. In some jobs, it is the high frequency of the cores that is important, while their number does not play a significant role. Conversely, in those processes that require multithreading and parallelization of computations, it is worth using a multi-core CPU with a lower frequency.

In jobs where a larger number of cores is useful, for example virtualization or video encoding, you should opt for servers with multi-core Xeon Gold and Xeon Silver processors. To accommodate large, high-load databases, processors with a higher frequency but fewer cores are more effective, such as the Intel Xeon E-series. If the server runs software that is licensed per core (for example, Windows OS), a processor with the minimum number of cores but the highest clock speed per core is usually best.

If your project does not require a powerful processor, you can choose more budget options from previous generations - for example, the Intel Xeon E3 or E5 series.

RAM

Server memory can be ranked by its conditional distance from the central processor: the processor's cache comes first, RAM second, and disk drives third. Cost follows roughly the same order - the closer to the processor, the more expensive. Recently, however, prices for RAM have become more affordable. The more memory your server has, the better, since applications can keep more data close to the processor and consequently run faster.

Bandwidth of network interfaces

ISPs typically offer 1G (1 Gbps) and 10G (10 Gbps) Ethernet ports. Two 1G ports are usually built into all servers, and 10G ports must be installed separately. The ports can be used to access the Internet and to organize a local network. For Internet access, 1G bandwidth will usually suffice, while for a local network it is better to use a higher-speed 10G port.

Disk drives

Generally speaking, the term "disk" is outdated, since only some modern storage devices (HDDs) are based on magnetic disks, while SSDs are built from memory chips. When choosing a disk subsystem, you should pay attention to three parameters: access time, capacity and price. They are closely related, and any compromise must be carefully considered. For example, SATA hard drives are inexpensive and offer large volumes (up to tens of TB), but have high access times. SSDs, on the other hand, offer shorter access times but are more expensive per GB. It makes sense to use a couple of small SSDs for storing databases and a boot partition, and a SATA drive for a large amount of static content or backups.

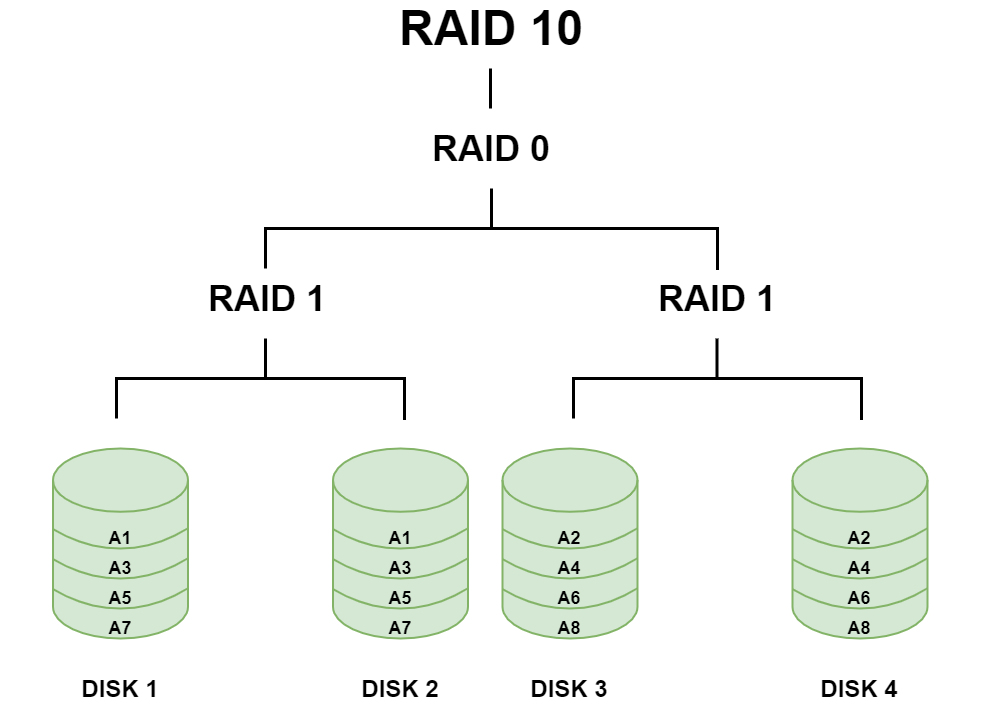

It is also important to consider data safety. With this in mind, it is better to choose server configurations with SSDs in RAID arrays. If one or more drives fail, depending on the RAID level, you can still access the data.

RAID arrays combine multiple disk devices into a single unit to improve fault tolerance and read / write speed. Choose a server with RAID1 or RAID10 arrays. Remember that you can build a pair of SSDs into a RAID1 for the system itself, and a RAID1 of SATA drives for static storage.
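To make the capacity trade-off of mirroring concrete, here is a minimal sketch that estimates the usable space of RAID 1 and RAID 10 arrays built from identical drives. The function name and the 480 GB drive size are illustrative assumptions, and RAID 10 is assumed to use two-way mirrors.

```python
def raid_usable_capacity_gb(level: str, drive_count: int, drive_size_gb: float) -> float:
    """Estimate usable capacity of an array of identical drives.

    Hypothetical helper for illustration; supports only RAID 1 and RAID 10.
    """
    if level == "raid1":
        if drive_count < 2:
            raise ValueError("RAID 1 needs at least two drives")
        # Every drive holds a full copy of the data,
        # so usable space equals the size of a single drive.
        return drive_size_gb
    if level == "raid10":
        if drive_count < 4 or drive_count % 2 != 0:
            raise ValueError("RAID 10 needs an even number of drives, at least four")
        # Drives are paired into two-way mirrors and the mirrors are striped,
        # so half of the raw capacity is usable.
        return drive_count / 2 * drive_size_gb
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    print(raid_usable_capacity_gb("raid1", 2, 480))   # two SSDs mirrored
    print(raid_usable_capacity_gb("raid10", 4, 480))  # four SSDs, striped mirrors
```

In both cases you pay for twice the capacity you can actually use; that is the price of surviving a drive failure.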

Cost

Obviously, money is also an important factor. And a dedicated server is usually the most expensive service offered by hosting providers. You should evaluate the costs of the server and see if its advantages can recoup the expense. If you make the right choice, it will certainly be worth your investment.

If you need to take a closer look and understand whether or not it is worth investing in renting a dedicated server, you can try an inexpensive option for starters. For example, the prices for dedicated servers can start at under $100 per month. If you understand that there is not enough power in the lower-end server, you can upgrade at any time. Choose another processor, order more memory, get an SSD - you only have to pay the remaining difference for the rented equipment. Or just change the server configuration to a more powerful one. Hard drives with information can be physically transferred to a more powerful platform, or data transfer can be performed by systems administrators without interrupting the service at all.

Can I have a real example of choosing a configuration?

Let's consider a hypothetical project to better understand how to determine the choice of configuration.

Let's say you have an online store selling home appliances. Typically traffic is between 40,000 and 45,000 visitors per month. The site contains a catalog of about 4,000 products.

In this case, a server with 64 GB of RAM and a good processor is best – for example, a Xeon E-2286G 4.00 GHz. Choose SSDs for faster read and write speeds, for example 2 x 480 GB SSDs.

To ensure that product data is not suddenly deleted in the event of disk failure, it is better to choose a configuration with RAID 1. Then, in the event of a failure, you can restore the information and continue working.

And, of course, we shouldn't forget about backups! One or even two RAID arrays assembled in one server do not guarantee data integrity if the RAID controller itself fails. Be sure to allocate funds for a backup system and store backups on a separate storage system. Server backup can cost as little as $10 per month.

The traffic to the online store is quite large, and clearly the site would contain a lot of HD images. Therefore, it is worth considering a bandwidth of at least 1G.
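A rough back-of-the-envelope check can show how much headroom a 1G port leaves for a store like this one. The sketch below uses the 45,000 monthly visitors from the example; the pages per visit, the average page weight, and the peak factor are assumptions chosen purely for illustration, so substitute figures from your own analytics.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # a 30-day month


def average_mbps(visitors_per_month: int, pages_per_visit: int, page_size_mb: float) -> float:
    """Average outbound traffic in megabits per second over a 30-day month."""
    total_megabits = visitors_per_month * pages_per_visit * page_size_mb * 8
    return total_megabits / SECONDS_PER_MONTH


if __name__ == "__main__":
    # Assumed values: 10 pages per visit, 3 MB per page (image-heavy catalog).
    avg = average_mbps(45_000, pages_per_visit=10, page_size_mb=3.0)
    # Assumed peak factor: busy hours carry 10x the monthly average.
    peak = avg * 10
    print(f"average: {avg:.2f} Mbps, estimated peak: {peak:.2f} Mbps")
```

Under these assumptions the average load is only a few Mbps, so a 1G port covers traffic spikes and promotional peaks with a wide margin.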

How to be sure of the reliability of the data center?

Once you have decided on the configuration, an equally important task arises: choosing a hosting provider and making sure that its data center meets all the necessary security requirements. After all, the reliability of the infrastructure, and hence of your server, depends on this choice as well.

To determine the reliability of a data center, there are Tier levels that take into account the most important factors of resiliency, security and efficiency. There are 4 levels in total:

Tier 1 - 99.67% uptime

Tier 2 - 99.74% uptime

Tier 3 - 99.98% uptime

Tier 4 - 99.99% uptime
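Uptime percentages are easier to compare when converted into allowed downtime per year. The following sketch does that conversion for the figures listed above; the function and dictionary names are illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year

# Uptime percentages as listed in this article.
TIER_UPTIME_PERCENT = {
    "Tier 1": 99.67,
    "Tier 2": 99.74,
    "Tier 3": 99.98,
    "Tier 4": 99.99,
}


def annual_downtime_hours(uptime_percent: float) -> float:
    """Convert an uptime percentage into allowed downtime in hours per year."""
    return (100.0 - uptime_percent) / 100.0 * HOURS_PER_YEAR


if __name__ == "__main__":
    for tier, uptime in TIER_UPTIME_PERCENT.items():
        hours = annual_downtime_hours(uptime)
        print(f"{tier}: about {hours:.1f} hours of downtime per year")
```

With these figures, Tier 1 allows roughly 28.9 hours of downtime per year, while Tier 3 allows about 1.8 hours.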

Let's explain some terms a little. Fault tolerance is characterized by the way in which the functioning of a group of servers is ensured in the event of failure of one or more of them. For this, various redundancy schemes are used. N stands for the minimum number of uninterruptible power supplies that guarantee the stable operation of the protected equipment. The N + 1 scheme adds an additional node which takes over the role of the failed node during a failure. A 2N scheme means that all components are duplicated.

Distributed flows provide duplication of the cooling pipeline, communication channels in the building, and electrical equipment.
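The N, N + 1 and 2N schemes described above boil down to simple arithmetic. As a minimal sketch (the function name is hypothetical), the following computes how many units are installed under each scheme and how many of them can fail while the required N units keep running.

```python
def redundancy_plan(n_required: int, scheme: str) -> tuple[int, int]:
    """Return (units installed, unit failures tolerated) for a redundancy scheme.

    n_required is N: the minimum number of units needed for stable operation.
    """
    if n_required < 1:
        raise ValueError("n_required must be at least 1")
    if scheme == "N":
        installed = n_required          # no spare capacity
    elif scheme == "N+1":
        installed = n_required + 1      # one spare unit takes over on failure
    elif scheme == "2N":
        installed = 2 * n_required      # every unit is duplicated
    else:
        raise ValueError(f"unknown scheme: {scheme}")
    return installed, installed - n_required


if __name__ == "__main__":
    for scheme in ("N", "N+1", "2N"):
        installed, tolerated = redundancy_plan(4, scheme)
        print(f"{scheme}: {installed} units installed, {tolerated} failures tolerated")
```

For a load that needs four units, N + 1 installs five and survives one failure, while 2N installs eight and survives up to four.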

It is better that the data center level is at least Tier 3.

In addition, it is worth paying attention to the following factors when choosing a provider:

Data protection. Are there any means of protection against hacker attacks and unauthorized access to the data, even in the event of a server failure?

DDoS protection. Are there ready-made tools?

Backups. Is there a backup service? If the hosting provider offers these services, it is a sign that the customer comes first.

So, seek out offers with built-in free DDoS protection at network levels L3 / L4, as well as additional server monitoring and backup.